Negative-Ion Influence on Precipitation

Preamble

This document is being developed by the Senior Engineer of AscenTrust, LLc. at the request of Mr. Arun Savkur for the benefit of WeatherTec.

The WeatherTec Ionization Technology has a proven track record in increasing the annual average amount of precipitation in the land area adjacent to the Emitter. The negative-ions produced at the Emitter and injected into the atmosphere rise to the convective cloud layer, where they act as Cloud Condensation Nuclei and increase natural rainfall development.

The Senior Engineer has been tasked with the development of a mathematical model linking the onset of precipitation, within an identifiable land area surrounding the Electrodynamic Device (which will be referred to as the Emitter), to the local electrodynamic State of the Fair Weather Voltage Gradient between the surface layer of the planetary boundary layer, where the Emitter is located, and the lower cloud layer of the troposphere. This model will introduce thermodynamics, statistical mechanics, fluid mechanics, turbulence and vortex theory into the conceptual framework for a realistic atmospheric model of the possibility of enhancing the amount of precipitation within the footprint of our point source of Negative-Ions.

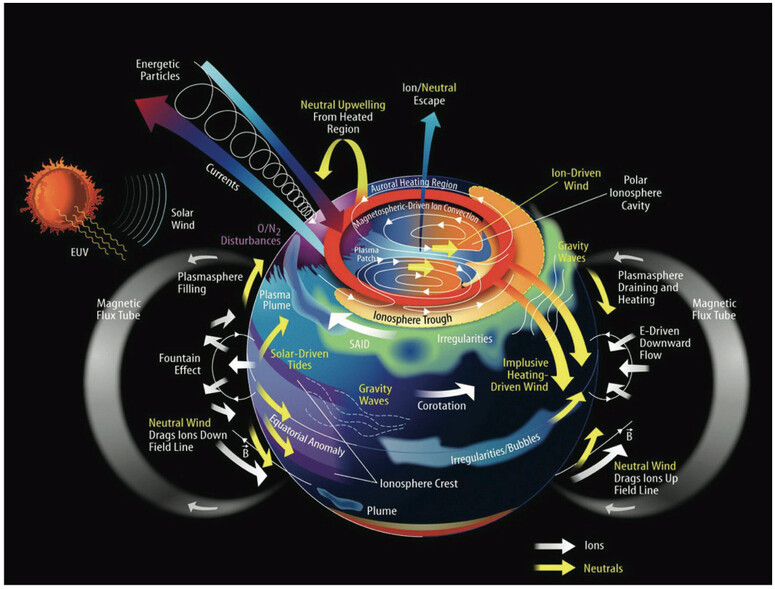

1. The Senior Engineer is a Graduate of Electrical and Computing Engineering and Graduated with distinction in 1969. His thesis topic, for his master's degree, was the development of a theoretical model of non-linear laser interactions to promote ion heating in magnetized plasma. It is therefore natural that the Senior Engineer would concentrate his efforts on the interactions of the Global Electrical Circuit, the Fair Weather Electrostatic Gradient and the diurnal behavior of the magnetosphere, to provide the theoretical background for the interaction of negative-ions with fair-weather clouds to enhance precipitation at the footprint of the Planetary Boundary Layer.

2. The definition of terms in meteorology and atmospheric physics is variable and inconsistent. In order to mitigate this problem we will be using the Glossary of Meteorology of the American Meteorological Society.

3. This document is being developed to provide the theoretical and mathematical basis supporting the use of the negative-ion Emitter technology belonging to WeatherTec to increase precipitation. The models being developed include thermodynamic and electrodynamic modeling.

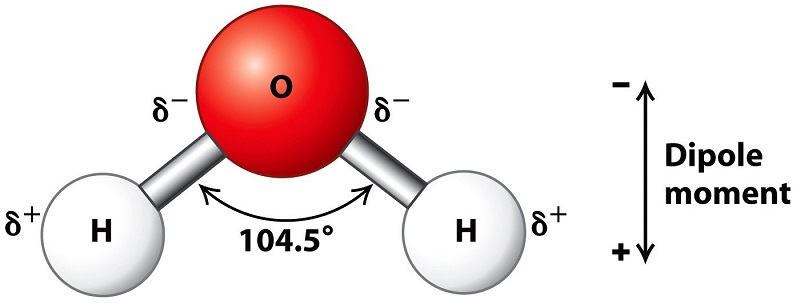

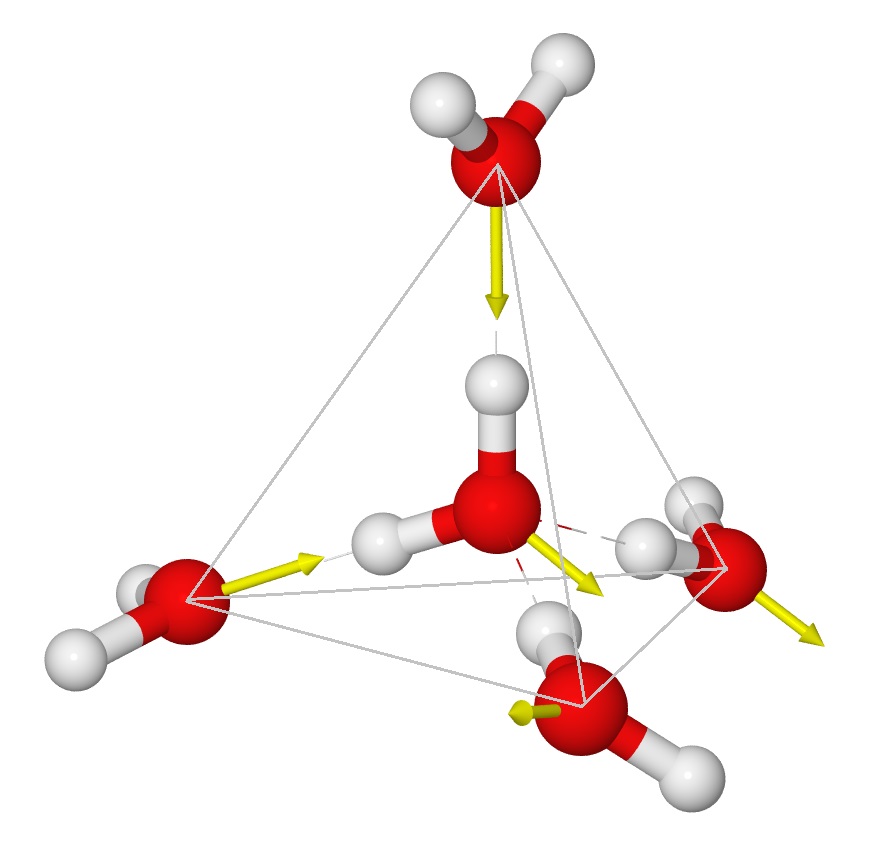

4. This document will provide a fairly extensive introduction to the dipole structure of the water molecule and to the increase in the individual dipole moment of the water molecule in a chemically bonded series of water molecules. The polar characteristics of water are among the most important properties of the water molecule. The dipolar structure of the water molecule is responsible for the large surface tension ascribed to a water droplet, which allows the water aerosol to form clouds.

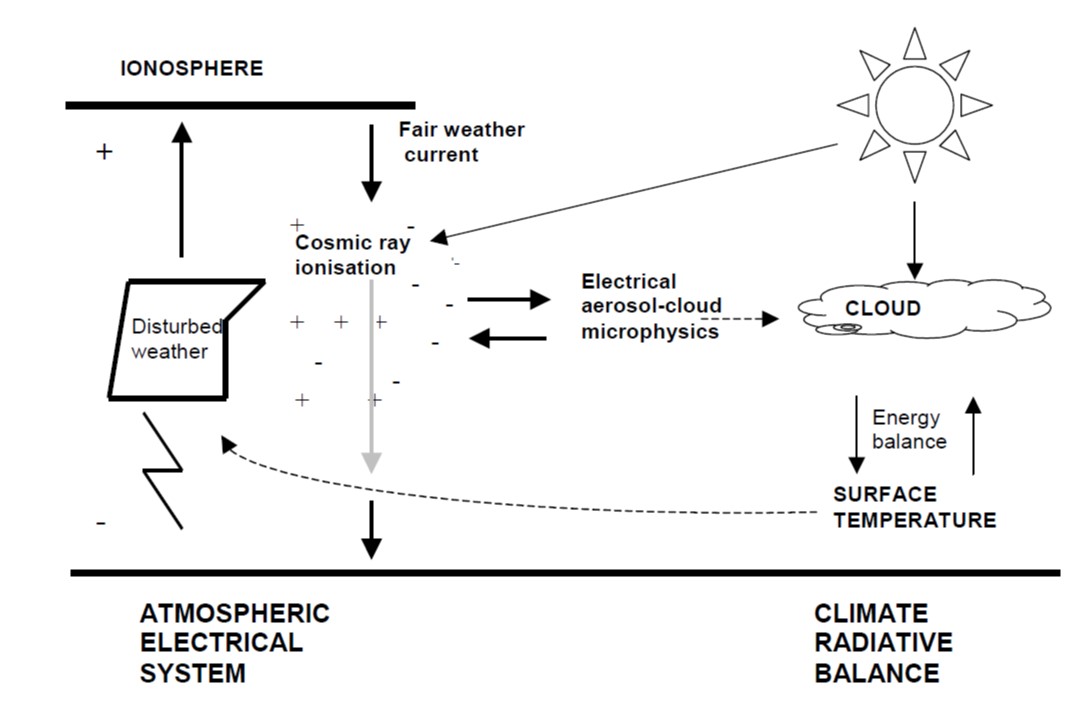

5. This document includes an introduction to the subjects of the Global Electrical Circuit, the Fair Weather Voltage Gradient and Atmospheric Electrodynamics. Atmospheric electrification is responsible for the creation of charged Cloud Condensation Nuclei (CCN). These CCN are responsible for the creation of Thunderstorms. The WeatherTec Emitter creates a negative-ion aerosol at the surface of the earth to modify the local micrometeorological Microphysics, and thereby create precipitation.

6. This webpage is in a state of ongoing development and new material will appear from time to time as the workload permits.

7. The text and mathematical equations in this document are rendered in HTML using a web-based LaTeX and JavaScript rendering engine.

Part One: Models and Reality

The Academic idea that modelling and simulations can bring us to an understanding of reality is false. Certainly, modelling has become an essential and inseparable part of many scientific disciplines, each of which has its own ideas about specific types of modelling. The following was said by John von Neumann:

... the sciences do not try to explain, they hardly even try to interpret, they mainly make models. By a model is meant a mathematical construct which, with the addition of certain verbal interpretations, describes observed phenomena. The justification of such a mathematical construct is solely and precisely that it is expected to work—that is, correctly to describe phenomena from a reasonably wide area.

A scientific model seeks to represent natural phenomena and physical processes in a logical and objective way. All models are simplified simulations of reality that, despite being approximations, can be extremely useful. Building and disputing models is fundamental to the Academic enterprise. Complete and true representations are impossible, but Academic debate often concerns which is the better model for a given task.

Attempts are made by Academics to formalize the principles of the empirical sciences in the same way logicians axiomatize the principles of logic. The aim of these attempts is to construct a formal system that will not produce theoretical consequences that are contrary to what is found in reality. Predictions or other statements drawn from such a formal system mirror or map the real world only insofar as these scientific models are true.

For the atmospheric scientist, the global models used are rendered in software algorithms to simulate, visualize and gain intuition about the atmospheric phenomenon or process being represented.

Part 1.1: Mathematical Models

A mathematical model is an abstract description of a concrete system using mathematical concepts and language. The process of developing a mathematical model is termed mathematical modeling. Mathematical models are used extensively in atmospheric physics, in the natural sciences and in engineering.

Mathematical models can take many forms, including dynamical systems, statistical models or differential equations. These and other types of models can overlap, with a given model involving a variety of abstract structures. In general, mathematical models may include logical models. In many cases, the quality of a scientific field depends on how well the mathematical models developed on the theoretical side agree with results of repeatable experiments. Lack of agreement between theoretical mathematical models and experimental measurements often leads to important advances as better theories are developed.

In the physical sciences, a traditional mathematical model contains most of the following elements:

- Governing equations

- Supplementary sub-models

- Defining equations

- Constitutive equations

- Assumptions and constraints

- Initial and boundary conditions

- Classical constraints and kinematic equations

Part 1.2: Mathematical Model Classification

Linear Vs. Nonlinear: If all the operators in a mathematical model exhibit linearity, the resulting mathematical model is defined as linear. A model is considered to be nonlinear otherwise. The definition of linearity and nonlinearity is dependent on context, and linear models may have nonlinear expressions in them. For example, in a statistical linear model, it is assumed that a relationship is linear in the parameters, but it may be nonlinear in the predictor variables. Similarly, a differential equation is said to be linear if it can be written with linear differential operators, but it can still have nonlinear expressions in it. In a mathematical programming model, if the objective functions and constraints are represented entirely by linear equations, then the model is regarded as a linear model. If one or more of the objective functions or constraints are represented with a nonlinear equation, then the model is known as a nonlinear model.
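The statistical case described above can be sketched in a few lines. The following is a minimal illustration, not part of the original model: the function name `fit_quadratic_term` and the synthetic data are assumptions chosen only to show that a model such as $ y = a + b x^{2} $ is nonlinear in the predictor but linear in the parameters, so ordinary least squares still solves it in closed form.

```python
# Fit y = a + b * x**2 by ordinary least squares.
# The model is nonlinear in the predictor x but linear in the parameters (a, b).
# Function name and data are hypothetical, for illustration only.

def fit_quadratic_term(xs, ys):
    """Return (a, b) minimising the sum of squared residuals of a + b*x**2."""
    zs = [x * x for x in xs]              # transformed predictor z = x**2
    n = len(xs)
    mean_z = sum(zs) / n
    mean_y = sum(ys) / n
    s_zz = sum((z - mean_z) ** 2 for z in zs)
    s_zy = sum((z - mean_z) * (y - mean_y) for z, y in zip(zs, ys))
    b = s_zy / s_zz                       # slope with respect to z
    a = mean_y - b * mean_z               # intercept
    return a, b


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0 + 0.5 * x * x for x in xs]      # synthetic, noise-free data
a, b = fit_quadratic_term(xs, ys)
print(a, b)
```

Because the data are generated without noise from known coefficients, the fit should recover them up to rounding error.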

Linear Structure: implies that a problem can be decomposed into simpler parts that can be treated independently and/or analyzed at a different scale and the results obtained will remain valid for the initial problem when recomposed and rescaled.

Nonlinearity: Nonlinearity, even in fairly simple systems, is often associated with phenomena such as chaos and irreversibility. Although there are exceptions, nonlinear systems and models tend to be more difficult to study than linear ones. A common approach to nonlinear problems is linearization, but this can be problematic if one is trying to study aspects such as irreversibility, which are strongly tied to nonlinearity.

Static Vs. Dynamic: A dynamic model accounts for time-dependent changes in the state of the system, while a static (or steady-state) model calculates the system in equilibrium, and thus is time-invariant. Dynamic models typically are represented by differential equations or difference equations.
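As a hedged illustration of the distinction, consider the simplest ion balance equation used in atmospheric electricity, $ dn/dt = q - \alpha n^{2} $, where $ n $ is the small-ion concentration, $ q $ the ion-pair production rate and $ \alpha $ the recombination coefficient. This equation is not taken from this document; it neglects attachment of ions to aerosols, and the numerical values of `Q` and `ALPHA` below are assumed, order-of-magnitude figures only. The static model solves for equilibrium directly, while the dynamic model steps a difference equation forward in time.

```python
import math

# Assumed, illustrative values (not measurements from this document).
Q = 10.0         # ion-pair production rate, cm^-3 s^-1
ALPHA = 1.6e-6   # ion-ion recombination coefficient, cm^3 s^-1


def steady_state(q, alpha):
    """Static model: set dn/dt = 0 and solve q - alpha*n**2 = 0 for n."""
    return math.sqrt(q / alpha)


def integrate(q, alpha, n0=0.0, dt=1.0, steps=5000):
    """Dynamic model: forward-Euler integration of dn/dt = q - alpha*n**2."""
    n = n0
    for _ in range(steps):
        n += dt * (q - alpha * n * n)
    return n


n_static = steady_state(Q, ALPHA)
n_dynamic = integrate(Q, ALPHA)
print(n_static, n_dynamic)
```

With these assumed values the dynamic solution relaxes toward the static one over a few hundred seconds, which is why the two results agree after a long integration.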

Explicit Vs. Implicit: If all of the input parameters of the overall model are known, and the output parameters can be calculated by a finite series of computations, the model is said to be explicit. But sometimes it is the output parameters which are known, and the corresponding inputs must be solved for by an iterative procedure, such as Newton's method or Broyden's method. In such a case the model is said to be implicit. For example, a jet engine's physical properties such as turbine and nozzle throat areas can be explicitly calculated given a design thermodynamic cycle (air and fuel flow rates, pressures, and temperatures) at a specific flight condition and power setting, but the engine's operating cycles at other flight conditions and power settings cannot be explicitly calculated from the constant physical properties.
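The difference can be sketched with a toy relation. In this minimal example the function `forward` is a hypothetical stand-in for any model that maps an input to an output; it is not a model from this document. Evaluating it is the explicit direction, while recovering the input that produces a known output requires an iterative procedure, here Newton's method.

```python
# Toy illustration of explicit versus implicit evaluation.
# forward() is a hypothetical model chosen only for illustration.

def forward(x):
    """Explicit direction: the output follows directly from the input."""
    return x ** 3 + 2.0 * x


def forward_derivative(x):
    """Analytic derivative of forward(), needed by Newton's method."""
    return 3.0 * x ** 2 + 2.0


def solve_input(target, x0=1.0, tol=1e-12, max_iter=50):
    """Implicit direction: find x such that forward(x) equals target."""
    x = x0
    for _ in range(max_iter):
        residual = forward(x) - target
        if abs(residual) < tol:
            return x
        x -= residual / forward_derivative(x)   # Newton update
    raise RuntimeError("Newton iteration did not converge")


y = forward(2.0)          # explicit: one finite computation
x = solve_input(y)        # implicit: iterate until the residual vanishes
print(y, x)
```

Newton's method is used here because the text names it; Broyden's method would serve the same purpose when the derivative is not available analytically.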

Discrete Vs. Continuous: A discrete model treats objects as discrete, such as the particles in a molecular model or the states in a statistical model; while a continuous model represents the objects in a continuous manner, such as the velocity field of fluid in pipe flows, temperatures and stresses in a solid, and electric field that applies continuously over the entire model due to a point charge.

Deterministic Vs. probabilistic (stochastic): A deterministic model is one in which every set of variable states is uniquely determined by parameters in the model and by sets of previous states of these variables; therefore, a deterministic model always performs the same way for a given set of initial conditions. Conversely, in a stochastic model—usually called a "statistical model"—randomness is present, and variable states are not described by unique values, but rather by probability distributions.

Deductive, inductive, or floating: A deductive model is a logical structure based on a theory. An inductive model arises from empirical findings and generalization from them. The floating model rests on neither theory nor observation, but is merely the invocation of expected structure. Application of mathematics in social sciences outside of economics has been criticized for unfounded models. Application of catastrophe theory in science has been characterized as a floating model.

Part Two: Prototypes

A prototype is the early physical model of a product built to test the theoretical validity of the model. It is a term used in a variety of contexts, including semantics, design, electronics, and software programming. A prototype is generally used to evaluate a new design to enhance precision by system analysts and users. Prototyping serves to provide specifications and acquire data for a real, working system rather than a theoretical one. In some design workflow models, creating a prototype (a process sometimes called materialization) is the step between the formalization and the evaluation of an idea. In the case of ionization of air and water molecules we have developed several different prototypes for different applications.

Prototypes explore the different aspects of an intended design:

1. A proof-of-principle prototype serves to verify some key functional aspects of the intended design, but usually does not have all the functionality of the final product.

2. A working prototype represents all or nearly all of the functionality of the final product.

3. A visual prototype represents the size and appearance, but not the functionality, of the intended design. A form study prototype is a preliminary type of visual prototype in which the geometric features of a design are emphasized, with less concern for color, texture, or other aspects of the final appearance.

4. A user experience prototype represents enough of the appearance and function of the product that it can be used for user research.

5. A functional prototype captures both function and appearance of the intended design, though it may be created with different techniques and even different scale from final design.

6. A paper prototype is a printed or hand-drawn representation of the user interface of a software product. Such prototypes are commonly used for early testing of a software design, and can be part of a software walkthrough to confirm design decisions before more costly levels of design effort are expended.

Part Three: Theoretical Model

This document was commissioned to elucidate an old theory of water droplet nucleation put forward in the early part of the twentieth century by Charles Thomson Rees Wilson. Wilson was a Scottish physicist and meteorologist who won the Nobel Prize in Physics for his invention of the cloud chamber. Despite Wilson's great contribution to particle physics, he remained interested in atmospheric physics, specifically atmospheric electricity, for his entire career. Wilson developed the first theory of the electrification of thunderstorm clouds.

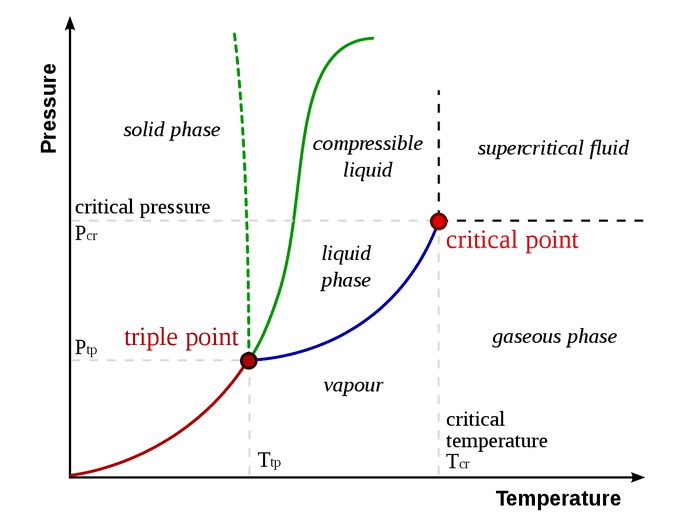

Clouds are formed by the lifting of damp air (from the planetary surface) which then cools adiabatically by expansion as it encounters falling pressure at higher levels of the troposphere. The relative humidity consequently rises and eventually the air becomes saturated with water vapor. Further cooling produces a supersaturated vapor. In this supersaturated layer the water condenses on to aerosols present in the upper troposphere. These aerosols can be carbon based (soot or fly ash), silica based (sand), solvated sea salt (Sodium Chloride) or positively or negatively charged ions. The condensation of water vapor on these aerosols, suspended in the middle to upper troposphere, forms a cloud composed of minute water droplets.
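The adiabatic cooling step can be made concrete with a short calculation. The sketch below assumes an unsaturated parcel of air that cools at the dry adiabatic lapse rate $ g / c_{p} $; the constants and the function names are illustrative choices and are not taken from this document. It applies only below the level at which the parcel becomes saturated, above which the release of latent heat makes the cooling rate smaller.

```python
# Dry adiabatic cooling of a rising, unsaturated air parcel.
# Constants and function names are assumptions for illustration.

G = 9.80665        # standard gravity, m s^-2
CP_DRY = 1004.0    # approximate specific heat of dry air at constant pressure, J kg^-1 K^-1


def dry_adiabatic_lapse_rate():
    """Cooling rate of an unsaturated rising parcel, in kelvin per metre."""
    return G / CP_DRY


def parcel_temperature(t_surface_k, height_m):
    """Parcel temperature after a dry adiabatic ascent to height_m."""
    return t_surface_k - dry_adiabatic_lapse_rate() * height_m


lapse_k_per_km = dry_adiabatic_lapse_rate() * 1000.0
t_at_1km = parcel_temperature(293.15, 1000.0)   # parcel starting at 20 degrees Celsius
print(lapse_k_per_km, t_at_1km)
```

The result is a cooling of a little under 10 K for each kilometre of ascent, which is why lifted surface air reaches saturation within the lower troposphere.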

Classical models of cloud seeding are based on the injection of aerosols above or below the cloud layer. These aerosols generally consist of sea salt, sulphates, silver iodide or dry ice (Carbon dioxide).

Our Weather Modification Technology creates negative-ion aerosols at the surface layer of the Planetary Boundary Layer of the atmosphere. These aerosols are then delivered to the underside of the saturated layer of the fair weather cloud.

The physics of our model will be centered around the thermodynamic and the electrodynamic interaction of clouds with the Global Electrical Circuit and its manifestation in the Earth Boundary Layer.

The three most important components required for the dynamics of water droplet formation are water, aerosols and ions.

Our analyses of the microphysics of the atmospheric surface boundary layer surrounding our negative ion emitter will include the physics of the corona discharge from a high voltage source. This is the methodology for the creation of raindrops in the upper part of the troposphere.

This document will concentrate on the electrodynamic interaction of water molecules with aerosols in the formation of the nucleation sphere required to create water droplets in the upper part of the troposphere.

At the Surface Layer of the Planetary Boundary Layer the microphysics of the prototype will include the interaction of the corona electrons required to create negative ions adjacent to the prototype. From the buildup of negative ions at the footprint of the prototype we will present the point source physics for the migration of the negative ions by convective and electric potential forces to the upper layer of the troposphere.

Part Four: Summary of Document

Section One: A brief introduction to Weather Systems with an emphasis on the interactions of local ionization with micrometeorology. Our negative-ion Emitter is located on the surface of the earth, in what is commonly referred to as the Surface Layer of the Planetary Boundary Layer. The geographic location of the emitter defines the geographic center of the area which we will refer to as the Zone of Precipitation of our weather modification atmospheric model.

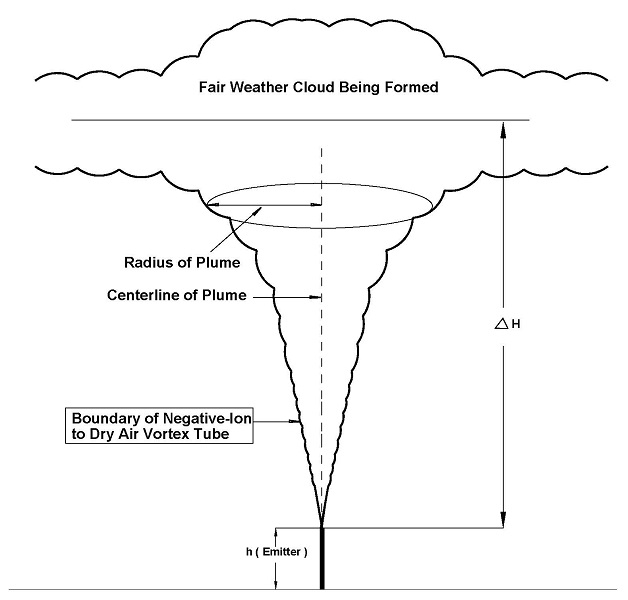

This document will include enough of the elements of mathematical physics to allow the reader to follow the mathematical modeling, both at large scale and at the microscale or local scale, and to understand the theoretical modeling of our Weather Modification Technology. We will be discussing the physics of negative-ion creation on the surface of the earth and the interaction of these negative-ions within the planetary boundary layer. The interaction of negative-ions with the surface voltage gradient and the planetary magnetic field at the boundary layer creates a vortex. The creation and maintenance of the vortex attached to the boundary layer of the earth creates a negative-ion aerosol plume. The aerosol plume rises to the cloud formation layer of the troposphere where the negatively charged ions form the central core of Cloud Condensation Nuclei.

Section Two: Introduction to Thermodynamic Concepts

The thermodynamic concepts which we are interested in are: Internal Energy, Enthalpy, Entropy, Helmholtz Free Energy, Gibbs Free Energy, etc. These thermodynamic properties will be introduced as belonging to a thermodynamic system. The thermodynamic properties of the system define the State of the system. This introduction to thermodynamic concepts will be done under the ideal notion of a Closed System. Only the thermodynamic properties of perfect gases will be addressed in this section, with the clear understanding that all systems considered in atmospheric thermodynamics are open systems and are therefore difficult to analyse using these classic thermodynamic concepts.

Section Three: Introduction to Classical Mechanics

The scope of this short introduction to classical mechanics will be kept within the narrow range of the mechanical laws which serve as an introduction to hydrodynamic, quantum mechanical, electrodynamic, magnetohydrodynamic and statistical mechanical theories of the atmosphere, and more particularly to cloud physics and the circulation models of the oceans and the atmosphere. The classical mechanical concepts which we are interested in are: Newtonian Mechanics, Lagrangian Mechanics and Hamiltonian Mechanics.

Section Four: Introduction to Hydrodynamics

The defining property of fluids, including atmospheric gases and water, lies in the ease with which they can be deformed. The fact that relative motion of different elements of a portion of fluid can, and in general does, occur when forces act on the fluid gives rise to the study of hydrodynamics. In this section we will outline some elementary concepts of fluid mechanics which are pertinent to atmospheric studies. In addition we will discuss the conservation laws for mass, momentum and energy. We will also introduce the Lagrangian and Eulerian specifications. Finally we will introduce concepts such as Newtonian versus non-Newtonian fluids and the concept of turbulent flow in the atmosphere.

Section Five: Introduction to Electrodynamics

The surface of the earth is a good conductor of electricity. The ocean, because of the inclusion of sodium chloride, an electrolyte, has a conductivity which is three orders of magnitude higher than the surface conductivity. We are therefore required to include a brief introduction to electrostatics, magnetostatics and electrodynamics to a sufficient level to grasp the main arguments concerning the creation of Charged Aerosols in the troposphere. These Cloud Condensation Nuclei form the core concept of nucleation which leads to precipitation.

Section Six: Introduction to magnetohydrodynamics

This section outlines those aspects of magnetohydrodynamics which are pertinent to atmospheric studies. The magnetohydrodynamic forces of the solar winds are extremely important in the creation and maintenance of the Global Electrical Circuit. We will also be concerned with the geomagnetic influence on thunderstorms.

Section Seven: Introduction to Electrochemistry

This section contains an analysis of those aspects of electrochemistry which are required to understand the essentials of the electrochemical interaction at the interface of the emitter to atmosphere and to follow the pathway to the creation of the small Aerosols responsible for the creation of cloud condensation nuclei.

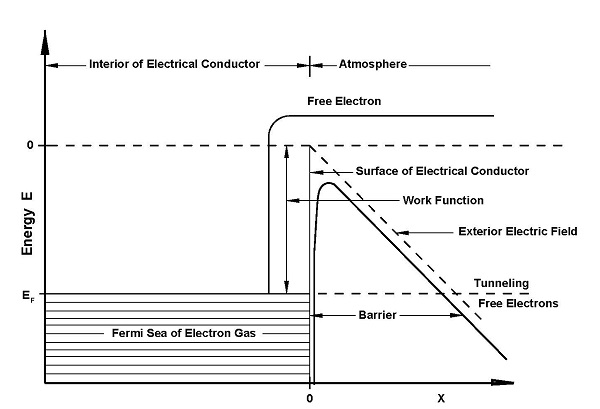

Section Eight: Introduction to the fundamentals of Quantum Mechanics

Many of the theoretical components of our modelling involve quantum mechanical models. This section is a brief overview of the quantum mechanical concepts required for a proper analysis of the interaction of electrons with atmospheric Oxygen for the initial formation of Aerosols at the planetary surface layer.

Section Nine: Introduction to Surface States

This section contains an outline of the Basic Theory of Surface States. The analysis will cover the quantum mechanical, thermodynamic, electrodynamic and electrochemical properties of the surface states of liquid water. These surface states are responsible for surface tension at the interface of water and the atmosphere.

Section Ten: A concise introduction to Nanoclusters and Microparticles, and to the nature and dynamical properties of Aerosols.

Section Eleven: A detailed introduction to the properties of water. This section contains a detailed analysis of the thermodynamic, electrodynamic and electrochemical properties of the water molecule which make this highly polar molecule one of the most important and unique substances in the biosphere.

Section Twelve: A detailed discussion of Aerosols. We will be discussing the nature and dynamical properties of Aerosols. An aerosol is defined as a suspension system of solid or liquid particles in a gas. In this document we will be discussing the interactions of aerosols with the planetary electrical circuit in the consolidation of water droplets to create precipitation.

Section Thirteen: consists of an introduction to Statistical Physics and Statistical Mechanics as a precursor to our study of turbulence and the theory of Vortices.

Section Fourteen: consists of an introduction to the topics related to the earth. These topics include the analysis of the earth as a Geoid, the earth's gravitation field, the earth's magnetic field and the earth's static electric gradient.

Section Fifteen: consists of an introduction to the earth's atmosphere. This introduction includes the general classification of the layers of the atmosphere. The atmosphere will be discussed in terms of the troposphere, the stratosphere, the mesosphere, the thermosphere, the exosphere and the ionosphere. We will also include a brief discussion of the ozone layer, the homosphere, the hydrosphere and the earth's boundary layer.

Section Sixteen: consists of an introduction to Turbulence. Turbulence or turbulent flow is fluid motion characterized by chaotic changes in pressure and flow velocity. All interactions in the atmosphere are classified as turbulent. The creation of vortices in the planetary boundary layer is a very important theoretical part of our model.

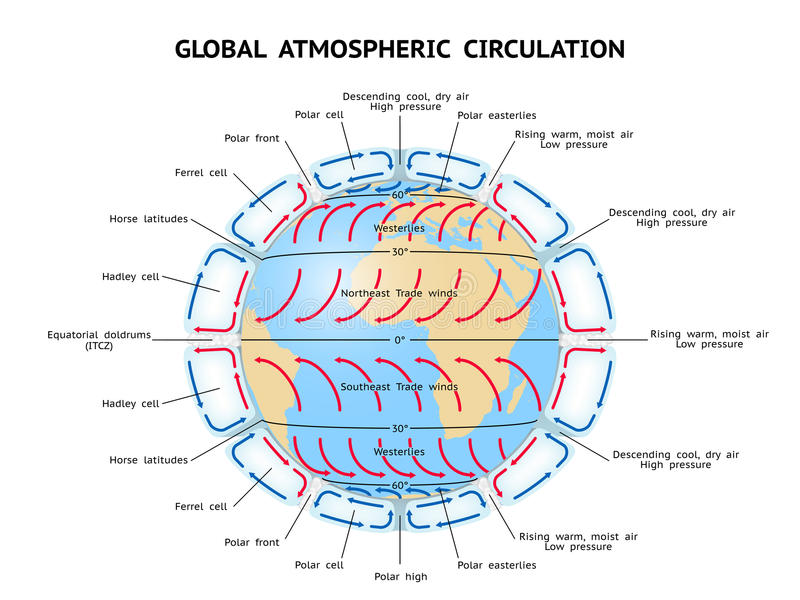

Section Seventeen: consists of an introduction to Dynamic meteorology. Dynamic meteorology is the study of those motions of the atmosphere that are associated with the production of the circulation models, including the dynamics of air and moisture. For all such motions the discrete molecular nature of the atmosphere can be ignored, and the atmosphere can be regarded as a continuous fluid medium.

Section Eighteen: consists of an introduction to the existing models of hydrodynamic turbulence. We will only outline the models which are useful for the elucidation of our theoretical model of enhancing precipitation through negative-ion interactions in the base of low level clouds, commonly referred to as fair weather clouds.

Section Nineteen: consists of a preliminary discussion of two of the most important planetary processes which are involved in the creation of clouds, precipitation and thunderstorms. The first is referred to as the Global Circulation Model and the second as the Global Electrical Circuit. The final part of the section will refer to the Planetary Magnetohydrodynamic System.

Section Twenty: consists of an introduction to Clouds, their classification and their role in precipitation. Terrestrial clouds can be found throughout most of the homosphere, which includes the troposphere, stratosphere, and mesosphere. Within these layers of the atmosphere, air can become saturated as a result of being cooled to its dew point or by having moisture added from an adjacent source. The moisture then coalesces to become droplets of water. These droplets are the major constituent of clouds.

Section Twenty-One: consists of an introduction to electrical effects on Fair Weather Clouds, which have been proposed to occur via the ion-assisted formation of ultra-fine aerosol.

Section Twenty-Two: consists of an introduction to Cloud Condensation Nuclei (CCN). CCN are small particles, typically 0.2 µm in size (about one hundredth the size of a cloud droplet), on which water vapour begins to condense to form a droplet. These cloud condensation nuclei then form the basis for the formation of raindrops in the upper part of the Troposphere. When the droplets reach a size of $ 100\,\mu\mathrm{m} $ they fall to earth as precipitation.
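The size figures quoted for CCN, cloud droplets and falling drops imply a large gap in volume, which a short calculation makes explicit. The sketch below is only arithmetic on the numbers in the text; it assumes that all three sizes refer to the same measure (for example diameter), that the particles are spherical, and that droplets merge without loss of water. The variable names are illustrative.

```python
# Arithmetic on the sizes quoted in the text (all in micrometres).
# Assumes spherical particles and a consistent size measure throughout.

CCN_SIZE_UM = 0.2          # typical CCN size quoted in the text
FALL_SIZE_UM = 100.0       # size at which droplets fall as precipitation

# "one hundredth the size of a cloud droplet" implies this droplet size:
cloud_droplet_size_um = CCN_SIZE_UM * 100.0

# Volume scales with the cube of the linear size.
droplet_to_ccn_volume = (cloud_droplet_size_um / CCN_SIZE_UM) ** 3
droplets_per_falling_drop = (FALL_SIZE_UM / cloud_droplet_size_um) ** 3

print(cloud_droplet_size_um, droplet_to_ccn_volume, droplets_per_falling_drop)
```

Under these assumptions a cloud droplet holds about a million times the volume of its nucleus, and on the order of a hundred cloud droplets must merge to form one drop large enough to fall.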

Section Twenty-Three: consists of a discussion of the different processes by which nuclear droplets may attain radii of several microns and so form a cloud. We will discuss the diffusion of water vapour to their surface and its condensation upon it, and the coalescence of droplets moving relative to each other by virtue of Brownian motion, small-scale turbulence, electrical forces, and differential rates of fall under gravity.

Section Twenty-Four: consists of an introduction to the Planetary Boundary Layer and the Surface Boundary Layer, which form the lower part of the atmosphere in direct contact with the earth. It is in the surface layer of the atmospheric boundary layer that our prototype injects negative ions into a vortex tube (aerosol plume), which allows the ions to migrate upwards and interact with the layer of low clouds to enhance the production of CCN and therefore increase the probability of precipitation.

Section Twenty-Five: consists of an introduction to the first prototype, which produces free electrons through corona discharge from a high-voltage copper cathode.

Section Twenty-Six: consists of an introduction to the flow of aerosols, in the form of negative-ions, charged water droplets, vapor or smoke, released into the air at a very small altitude in the surface layer of the planetary boundary layer. Plumes are of considerable importance in the atmospheric dispersion modelling of aerosols commonly referred to as air pollution. There are three primary types of aerosol emission plumes:

Section One: Introduction to Weather Systems and Micrometeorology

Weather is the state of the atmosphere, describing for example the degree to which it is hot or cold, wet or dry, calm or stormy, clear or cloudy. On Earth, most weather phenomena occur in the lowest layer of the planet's atmosphere, the Troposphere, just below the Stratosphere. Weather refers to day-to-day temperature, precipitation, and other atmospheric conditions, whereas climate is the term for the averaging of atmospheric conditions over longer periods of time.

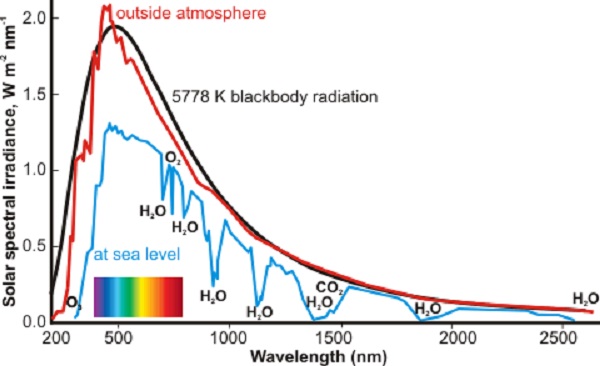

Earth's Energy Budget accounts for the balance between the energy that Earth receives from the Sun and the energy the Earth loses back into outer space. Smaller energy sources, such as Earth's internal heat, are taken into consideration, but make a tiny contribution compared to solar energy. The energy budget also accounts for how energy moves through the climate system. Because the Sun heats the equatorial tropics more than the polar regions, received solar irradiance is unevenly distributed. As the energy seeks equilibrium across the planet, it drives interactions in Earth's climate system, i.e., Earth's water, ice, atmosphere, rocky crust, and all living things. The result is the Earth's weather system.

The Solar Wind is a stream of charged particles released from the upper atmosphere of the Sun, called the corona. This plasma mostly consists of electrons, protons and alpha particles with kinetic energy between 0.5 and 10 keV. The composition of the solar wind plasma also includes a mixture of materials found in the solar plasma: trace amounts of heavy ions and atomic nuclei. Superimposed with the solar-wind plasma is the interplanetary magnetic field. The solar wind varies in density, temperature and speed over time and over solar latitude and longitude. Its particles can escape the Sun's gravity because of their high energy resulting from the high temperature of the corona, which in turn is a result of the coronal magnetic field. The boundary separating the corona from the solar wind is called the Alfvén surface.

At a distance of more than a few solar radii from the Sun, the solar wind reaches speeds of 250–750 km/s and is supersonic, meaning it moves faster than the speed of the fast magnetosonic wave. The flow of the solar wind is no longer supersonic at the termination shock. Other related phenomena include the aurora (northern and southern lights), the plasma tails of comets that always point away from the Sun, and geomagnetic storms that can change the direction of magnetic field lines.
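The kinetic energies and flow speeds quoted above for the solar wind can be checked against each other with a short calculation. The sketch below converts a proton kinetic energy into a speed using the non-relativistic relation $ v = \sqrt{2E/m} $, which is adequate because the resulting speeds are far below the speed of light. The function name is an illustrative choice; the physical constants are standard values.

```python
import math

# Convert a proton kinetic energy (keV) into a speed (km/s).
# Non-relativistic approximation; function name is illustrative.

JOULE_PER_EV = 1.602176634e-19   # joules per electronvolt
M_PROTON_KG = 1.67262192e-27     # proton rest mass, kg


def proton_speed_km_s(energy_kev):
    """Speed of a proton with the given kinetic energy, from v = sqrt(2E/m)."""
    energy_j = energy_kev * 1.0e3 * JOULE_PER_EV
    return math.sqrt(2.0 * energy_j / M_PROTON_KG) / 1.0e3


v_low = proton_speed_km_s(0.5)    # lower end of the quoted energy range
v_mid = proton_speed_km_s(1.0)
v_high = proton_speed_km_s(10.0)  # upper end of the quoted energy range
print(v_low, v_mid, v_high)
```

Protons at the lower end of the quoted energy range move at a few hundred kilometres per second, which is the same order as the bulk flow speeds given in the text; the upper end of the energy range corresponds to speeds above one thousand kilometres per second.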

Atmospheric motions are characterized by a variety of scales, ranging from the order of a millimeter to as large as the circumference of the earth at the planetary scale. The corresponding time scales range from seconds and hours to diurnal (daily) and yearly periods. These scales of motion are generally classified as micro-, meso-, and macroscales. Sometimes, terms such as local, regional and global are used to characterize the atmospheric scales and the phenomena associated with them.

This document will be mainly concerned with atmospheric physics at the microscale or local scale and we are therefore working in the scope of Micrometeorology.

Micrometeorology is a branch of meteorology which deals with atmospheric phenomena and processes at the lower end of the spectrum of atmospheric physics, which are variously characterized as microscale, small-scale or local-scale processes.

There is a large body of historical research linking the creation of water droplets in the troposphere to the existence of negative and positive ions. The existence of ions in the troposphere creates physical links among clouds, the global atmospheric electrical circuit and cosmic ray ionisation.

The global circuit extends throughout the atmosphere from the planetary surface to the lower layers of the ionosphere. Cosmic rays are the principal source of atmospheric ions away from the continental boundary layer: the ions formed permit a vertical conduction current to flow in the fair weather part of the global circuit. Through the (inverse) solar modulation of cosmic rays, the resulting columnar ionisation changes may allow the global circuit to convey a solar influence to meteorological phenomena of the lower atmosphere.

Electrical effects on non-thunderstorm clouds have been extensively studied by the Russians and the Germans, who have substantially elucidated two mechanisms: the ion-assisted formation of ultrafine aerosol, which can grow to sizes able to act as cloud condensation nuclei, and the increased ice nucleation capability of charged aerosols.

Even small atmospheric electrical modulations on the aerosol size distribution can affect cloud properties and modify the radiative balance of the atmosphere, through changes communicated locally by the atmospheric electrical circuit.

Section Two: Thermodynamics

Our interest in cloud thermodynamics has to do with our approach to the physics of water droplet formation via negative ion creation in the planetary boundary layer and the transport of these ions to the upper part of the troposphere, to form Cloud Condensation Nuclei for condensation of water vapor into water droplets. The thermodynamic function which we will be mainly interested in is the Gibbs Free Energy and we will use the Gibbs free energy to justify the thermodynamic processes which will be outlined below.

When a system is at equilibrium under a given set of conditions, it is said to be in a definite thermodynamic state. The state of the system can be described by a number of state quantities that do not depend on the process by which the system arrived at its state. They are called intensive variables or extensive variables according to how they change when the size of the system changes. The properties of the system can be described by an equation of state which specifies the relationship between these variables. State may be thought of as the instantaneous quantitative description of a system with a set number of variables held constant.

A thermodynamic process may be defined as the energetic evolution of a thermodynamic system proceeding from an initial state to a final state. It can be described by process quantities. Typically, each thermodynamic process is distinguished from other processes in energetic character according to which parameters, such as temperature, pressure or volume, are held fixed. Furthermore, it is useful to group these processes into pairs, in which each variable held constant is one member of a conjugate pair.

Thermodynamic Processes Important in Atmospheric Physics are:

1. Adiabatic process: occurs without loss or gain of energy by heat transfer

2. Isenthalpic process: occurs at a constant enthalpy

3. Isentropic process: occurs at constant entropy

4. Isobaric process: occurs at constant pressure

5. Isothermal process: occurs at a constant temperature

6. Steady state process: occurs without a change in the internal energy

Thermodynamic potentials are different quantitative measures of the stored energy in a system. Potentials are used to measure the energy changes in systems as they evolve from an initial state to a final state. The potential used depends on the constraints of the system, such as constant temperature or pressure.

For example, the Gibbs Free energy will be used almost exclusively in our analysis of the thermodynamics of droplet creation and growth in the clouds.

Part 2.1: Background

The use of thermodynamic ideas in the study of weather and climate allows us to quantify the movement of energy within the oceans and the atmosphere of the earth. This part of the document will provide an overview of the basic laws of thermodynamics and the thermodynamic processes which we will be using in this document.

We will first state the two laws of thermodynamics which are pertinent to our discussion of the micrometeorology of the surface area, within the Planetary Boundary Layer, surrounding the negative-ion weather modification device.

The first law of thermodynamics

The first law of thermodynamics is essentially a version of the law of conservation of energy, adapted for thermodynamic processes. In general, the conservation law states that the total energy of an isolated system is constant; energy can be transformed from one form to another, but can be neither created nor destroyed.

In a closed system (i.e. there is no transfer of matter into or out of the system), the first law states that the change in internal energy of the system (ΔU system) is equal to the difference between the heat supplied to the system (Q) and the work (W) done by the system on its surroundings.

$${\displaystyle { \Delta U_{\rm {system}}=Q-W \label{eq1}}} $$

The First Law asserts the existence of a state variable which is usually called the Internal Energy. This internal energy, $ {\displaystyle U} $ , along with the volume, $ {\displaystyle V} $ , of the system and the mole numbers, $ {\displaystyle N_i} $ , of its chemical constituents, characterizes the macroscopic properties of the system's equilibrium states.

The Second law of thermodynamics

In a reversible or quasi-static, idealized process of transfer of energy as heat to a closed thermodynamic system of interest, (which allows the entry or exit of energy – but not transfer of matter), from an auxiliary thermodynamic system, an infinitesimal increment ($ {\displaystyle \mathrm {d} S}$ ) in the entropy of the system of interest is defined to result from an infinitesimal transfer of heat ( $ {\displaystyle \delta Q}$ ) to the system of interest, divided by the thermodynamic temperature $ {\displaystyle (T)} $ of the system and the auxiliary thermodynamic system:

$${\displaystyle \mathrm {d} S={\frac {\delta Q}{T}}\qquad\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,{\text{(closed system; idealized, reversible process)}}.}$$A convenient form of the second law, useful in atmospheric physics, is to state that for any equilibrium system there exists a state function called entropy, $ {\displaystyle S} $ and that this entropy has the property that it is a function of the extensive parameters of a composite system, such that:

$$ {\displaystyle S = S(U, V, N_1 , . . . , N_ r )} $$Where $ {\displaystyle N_i} $ denotes the mole number of the ith constituent. We further assume that the entropy is additive over the constituent subsystems, that it is continuous and differentiable and a monotonically increasing function of the internal energy, $ {\displaystyle U} $ .

We further assume that, in the absence of internal constraints, the values of the extensive parameters in equilibrium are those that maximize the entropy.

Part 2.2: Fundamental Relations

There are four fundamental equations which demonstrate how the four most important thermodynamic quantities depend on variables that can be controlled and measured experimentally. Thus, they are essentially equations of state, and using the fundamental equations, experimental data can be used to determine sought-after quantities. The relation is generally expressed as an infinitesimal change in internal energy in terms of infinitesimal changes in entropy and volume, for a closed system in thermal equilibrium, in the following way: $$ {\displaystyle \mathrm {d} U=T\,\mathrm {d} S-P\,\mathrm {d} V\,} $$ where $ {\displaystyle U} $ is internal energy, $ {\displaystyle T} $ is absolute temperature, $ {\displaystyle S} $ is entropy, $ {\displaystyle P} $ is pressure, and $ {\displaystyle V} $ is volume.

This is only one expression of the fundamental thermodynamic relation. It may be expressed in other ways, using different variables (e.g. using thermodynamic potentials). For example, the fundamental relation may be expressed in terms of the enthalpy as: $$ {\displaystyle \mathrm {d} H=T\,\mathrm {d} S+V\,\mathrm {d} P\,} $$ In terms of the Helmholtz free energy ($ {\displaystyle F} $ ) as: $$ {\displaystyle \mathrm {d} F=-S\,\mathrm {d} T-P\,\mathrm {d} V\,} $$ and in terms of the Gibbs free energy ($ {\displaystyle G} $ ) as:

$$ {\displaystyle \mathrm {d} G=-S\,\mathrm {d} T+V\,\mathrm {d} P\,} $$

Part 2.2.1: Internal Energy

The properties of entropy outlined above ensure that the entropy function can be used to define the Internal Energy, such that:

$$ {\displaystyle U = U(S, V, N_1 , . . . , N_ r )} $$This expression is sometimes called the Fundamental Relation in energy form. In specific (per unit mole, or unit mass) form, it can be written as:

$$ {\displaystyle u = u(s, v, n_1 , . . . , n_r ) } $$Where $ {\displaystyle n_i = {\frac {N_i}{\sum_j N_j}}} $ is the mole fraction of the ith constituent.

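The Clausius–Clapeyron relation for the saturation vapor pressure $ {\displaystyle P} $ of an ideal vapor, with $ {\displaystyle L} $ the latent heat and $ {\displaystyle R} $ the gas constant expressed on the same (per unit mass or per mole) basis, is:

$$ {\displaystyle {\frac {\mathrm {d} P}{\mathrm {d} T}}={\frac {PL}{T^{2}R}}} $$

Returning to the fundamental relation in energy form, its total differential is: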

$$ {\displaystyle \mathrm{d}U = ( \partial U / \partial S)_{V,N_i} dS + (\partial U / \partial V)_{S,N_i} dV + \sum_i (\partial U / \partial N_i)_{S,V} dN_i } $$

Part 2.2.2: Entropy

According to the Clausius equality, for a closed homogeneous system, in which only reversible processes take place, $$ {\displaystyle \oint {\frac {\delta Q}{T}}=0.} $$ With $ {\displaystyle T } $ being the uniform temperature of the closed system and $ {\displaystyle \delta Q } $ the incremental reversible transfer of heat energy into that system. That means the line integral $ {\textstyle \int _{L}{\frac {\delta Q}{T}}} $ is path-independent.

A state function $ {\displaystyle S } $ , called entropy, may be defined which satisfies: $$ {\displaystyle \mathrm {d} S={\frac {\delta Q}{T}}.} $$ Entropy Measurement: The thermodynamic state of a uniform closed system is determined by its temperature $ {\displaystyle T } $ and pressure $ {\displaystyle P } $ . A change in entropy can be written as: $$ {\displaystyle \mathrm {d} S=\left({\frac {\partial S}{\partial T}}\right)_{P}\mathrm {d} T+\left({\frac {\partial S}{\partial P}}\right)_{T}\mathrm {d} P.} $$ The first contribution depends on the heat capacity at constant pressure $ {\displaystyle C_P } $ through: $$ {\displaystyle \left({\frac {\partial S}{\partial T}}\right)_{P}={\frac {C_{P}}{T}}.} $$

This is the result of the definition of the heat capacity by $ {\displaystyle \delta Q = C_P dT } $ and $ {\displaystyle T dS = \delta Q } $ . The second term may be rewritten with one of the Maxwell relations:

$$ {\displaystyle \left({\frac {\partial S}{\partial P}}\right)_{T}=-\left({\frac {\partial V}{\partial T}}\right)_{P}} $$And the definition of the volumetric thermal-expansion coefficient: $$ {\displaystyle \alpha _{V}={\frac {1}{V}}\left({\frac {\partial V}{\partial T}}\right)_{P}} $$

So that:

$$ {\displaystyle \mathrm {d} S={\frac {C_{P}}{T}}\mathrm {d} T-\alpha _{V}V\mathrm {d} P.} $$With this expression the entropy $ {\displaystyle S } $ at arbitrary $ {\displaystyle P } $ and $ {\displaystyle T } $ can be related to the entropy $ {\displaystyle S_0 } $ at some reference state at $ {\displaystyle P_0 } $ and $ {\displaystyle T_0 } $ according to:

$$ {\displaystyle S(P,T)=S(P_{0},T_{0})+\int _{T_{0}}^{T}{\frac {C_{P}(P_{0},T^{\prime })}{T^{\prime }}}\mathrm {d} T^{\prime }-\int _{P_{0}}^{P}\alpha _{V}(P^{\prime },T)V(P^{\prime },T)\mathrm {d} P^{\prime }.} $$In classical thermodynamics, the entropy of the reference state can be put equal to zero at any convenient temperature and pressure.

$ {\displaystyle S(P, T) } $ is determined by following a specific path in the $ {\displaystyle P-T } $ diagram: in the first integral one integrates over $ {\displaystyle T } $ at constant pressure $ {\displaystyle P_0 } $ , so that $ {\displaystyle dP = 0 } $ , and in the second integral one integrates over $ {\displaystyle P } $ at constant temperature $ {\displaystyle T } $ , so that $ {\displaystyle dT = 0 } $ . As the entropy is a function of state, the result is independent of the path.

The above relation shows that the determination of the entropy requires knowledge of the heat capacity and the equation of state. Normally these are complicated functions and numerical integration is needed.
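As a hedged illustration of the numerical integration mentioned above, the following Python sketch evaluates the two integrals for an ideal gas, for which $ {\displaystyle \alpha_V V = nR/P} $ , and compares the result with the closed-form expression. The constant molar heat capacity $ {\displaystyle C_P = 7R/2} $ , the function names, the sample states and the step count are assumptions made for this example only.

```python
import math

R = 8.314462618  # universal gas constant, J/(mol K)

def trapezoid(f, a, b, steps=10_000):
    """Composite trapezoid rule for the integral of f from a to b."""
    h = (b - a) / steps
    total = 0.5 * (f(a) + f(b))
    for i in range(1, steps):
        total += f(a + i * h)
    return total * h

def entropy_change(T0, P0, T, P, n=1.0, cp_molar=3.5 * R):
    """S(P, T) - S(P0, T0) along the path T0 -> T at P0, then P0 -> P at T.

    Assumes an ideal gas, so alpha_V * V = n R / P, and a constant molar
    heat capacity cp_molar (illustrative value for a diatomic gas).
    """
    thermal = trapezoid(lambda t: n * cp_molar / t, T0, T)
    pressure = trapezoid(lambda p: n * R / p, P0, P)
    return thermal - pressure

# Illustrative states: a parcel cooled and moved to lower pressure.
dS = entropy_change(T0=288.15, P0=101325.0, T=268.15, P=70000.0)
exact = 3.5 * R * math.log(268.15 / 288.15) - R * math.log(70000.0 / 101325.0)
print(f"numerical dS = {dS:.6f} J/K, closed form = {exact:.6f} J/K")
```

For a real gas or for moist air, the two lambdas would be replaced by tabulated or fitted heat-capacity and equation-of-state data; the integration path stays the same.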

The entropy of inhomogeneous systems is the sum of the entropies of the various subsystems. The laws of thermodynamics can be used for inhomogeneous systems even though they may be far from internal equilibrium. The only condition is that the thermodynamic parameters of the composing subsystems are (reasonably) well-defined.

Part 2.2.3: Enthalpy

The enthalpy $ {\displaystyle H} $ of a thermodynamic system is defined as the sum of its internal energy and the product of its pressure and volume:

$$ {\displaystyle H = U + pV} $$Where $ {\displaystyle U} $ is the internal energy, $ {\displaystyle p} $ is pressure, and $ {\displaystyle V} $ is the volume of the system.

Enthalpy is an extensive property; it is proportional to the size of the system (for homogeneous systems). As intensive properties, the specific enthalpy $ {\displaystyle h = H/m} $ is referenced to a unit of mass $ {\displaystyle m} $ of the system, and the molar enthalpy $ {\displaystyle H_n} $ is $ {\displaystyle H/n} $ , where $ {\displaystyle n} $ is the number of moles. For inhomogeneous systems the enthalpy is the sum of the enthalpies of the component subsystems:

$$ {\displaystyle H=\sum _{k}H_{k},} $$Where:

- $ {\displaystyle H } $ is the total enthalpy of all the subsystems,

- $ {\displaystyle k } $ refers to the various subsystems,

- $ {\displaystyle H_k } $ refers to the enthalpy of each subsystem,

A closed system may lie in thermodynamic equilibrium in a static gravitational field, so that its pressure $ {\displaystyle p} $ varies continuously with altitude, while, because of the equilibrium requirement, its temperature $ {\displaystyle T} $ is invariant with altitude. (Correspondingly, the system's gravitational potential energy density also varies with altitude.) Then the enthalpy summation becomes an integral:

$$ {\displaystyle H=\int (\rho h)\,dV,} $$Where:

- $ {\displaystyle \rho } $ is density (mass per unit volume),

- $ {\displaystyle h } $ is the specific enthalpy (enthalpy per unit mass),

- $ {\displaystyle (\rho h) } $ represents the enthalpy density (enthalpy per unit volume),

- $ {\displaystyle dV } $ denotes an infinitesimally small element of volume within the system,

The integral therefore represents the sum of the enthalpies of all the elements of the volume.
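The enthalpy integral can be sketched numerically for the isothermal column described above. The following Python example is a minimal sketch, not a definitive model: it assumes dry air behaving as an ideal gas, a hydrostatic density profile, a specific enthalpy $ {\displaystyle h = c_p T} $ referenced to 0 K, and typical textbook values for the constants. All names and sample values are hypothetical.

```python
import math

G = 9.80665      # gravitational acceleration, m/s^2
R_DRY = 287.05   # specific gas constant of dry air, J/(kg K) (typical value)
CP_DRY = 1004.0  # specific heat of dry air at constant pressure, J/(kg K) (typical value)

def column_enthalpy(T, p_surface, z_top, layers=20_000):
    """Enthalpy per unit horizontal area (J/m^2) of an isothermal air column.

    Midpoint-rule sum of rho * h * dz, with rho from hydrostatic balance
    and h = cp * T (ideal gas, enthalpy referenced to 0 K: an assumption).
    """
    scale_height = R_DRY * T / G
    rho_surface = p_surface / (R_DRY * T)
    dz = z_top / layers
    h = CP_DRY * T
    total = 0.0
    for k in range(layers):
        z_mid = (k + 0.5) * dz
        rho = rho_surface * math.exp(-z_mid / scale_height)
        total += rho * h * dz
    return total

T, P_SURFACE, Z_TOP = 288.15, 101325.0, 2000.0
numeric = column_enthalpy(T, P_SURFACE, Z_TOP)

# Closed form of the same integral, used only as a cross-check.
scale_height = R_DRY * T / G
analytic = (P_SURFACE / (R_DRY * T)) * CP_DRY * T * scale_height * (
    1.0 - math.exp(-Z_TOP / scale_height)
)
print(f"numeric = {numeric:.4e} J/m^2, analytic = {analytic:.4e} J/m^2")
```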

The enthalpy of a closed homogeneous system is its energy function $ {\displaystyle H(S,p) } $ , with its entropy $ {\displaystyle S[p] } $ and its pressure $ {\displaystyle p } $ as natural state variables which provide a differential relation for $ {\displaystyle dH } $ of the simplest form, derived as follows.

We start from the first law of thermodynamics for closed systems for an infinitesimal process:

$$ {\displaystyle dU=\delta Q-\delta W,} $$Where:

- $ {\displaystyle \delta Q } $ is a small amount of heat added to the system,

- $ {\displaystyle \delta W } $ is a small amount of work performed by the system.

In a homogeneous system in which only reversible processes or pure heat transfer are considered, the second law of thermodynamics gives $ {\displaystyle \delta Q = T dS } $ , with $ {\displaystyle T } $ the absolute temperature and $ {\displaystyle dS } $ the infinitesimal change in entropy $ {\displaystyle S } $ of the system. Furthermore, if only $ {\displaystyle pV } $ work is done, $ {\displaystyle \delta W = p dV } $ . As a result:

$$ {\displaystyle dU=T\,dS-p\,dV.} $$Adding $ {\displaystyle d(pV) } $ to both sides of this expression gives:

$$ {\displaystyle dU+d(pV)=T\,dS-p\,dV+d(pV),} $$Or:

$$ {\displaystyle d(U+pV)=T\,dS+V\,dp.} $$So:

$$ {\displaystyle dH(S,p)=T\,dS+V\,dp.} $$And the coefficients of the natural variable differentials $ {\displaystyle dS } $ and $ {\displaystyle dp } $ are just the single variables $ {\displaystyle T } $ and $ {\displaystyle V } $ .

Part 2.2.4: Helmholtz Free Energy

Definition: The Helmholtz free energy is defined as:

$$ {\displaystyle F\equiv U-TS,} $$Where:

- $ {\displaystyle F } $ is the Helmholtz free energy (SI: joules),

- $ {\displaystyle U } $ is the internal energy of the system (SI: joules),

- $ {\displaystyle T } $ is the absolute temperature of the surroundings,

- $ {\displaystyle S } $ is the entropy of the system (SI: joules per kelvin).

The Helmholtz energy is the Legendre transformation of the internal energy $ {\displaystyle U,}$ in which temperature replaces entropy as the independent variable.

The first law of thermodynamics in a closed system provides:

$$ {\displaystyle \mathrm {d} U=\delta Q\ +\delta W} $$Where $ {\displaystyle U } $ is the internal energy, $ {\displaystyle \delta Q} $ is the energy added as heat, and $ {\displaystyle \delta W} $ is the work done on the system. The second law of thermodynamics for a reversible process yields $ {\displaystyle \delta Q=T\,\mathrm {d} S} $ . In case of a reversible change, the work done can be expressed as $ {\displaystyle \delta W=-p\,\mathrm {d} V} $

and so:

$$ {\displaystyle \mathrm {d} U=T\,\mathrm {d} S-p\,\mathrm {d} V.} $$Applying the product rule for differentiation to $ {\displaystyle \mathrm {d} (TS)=T\mathrm {d} S\,+S\mathrm {d} T} $ , it follows:

$$ {\displaystyle \mathrm {d} U=\mathrm {d} (TS)-S\,\mathrm {d} T-p\,\mathrm {d} V,} $$and

$$ {\displaystyle \mathrm {d} (U-TS)=-S\,\mathrm {d} T-p\,\mathrm {d} V.} $$The definition $ {\displaystyle F=U-TS} $ allows this to be rewritten as:

$$ {\displaystyle \mathrm {d} F=-S\,\mathrm {d} T-p\,\mathrm {d} V.} $$Because $ {\displaystyle F } $ is a thermodynamic function of state, this relation is also valid for a process (without electrical work or composition change) that is not reversible.

Part 2.2.5: Gibbs Free Energy

The Gibbs free energy (symbol $ {\displaystyle G} $ ) is a thermodynamic potential that can be used to calculate the maximum amount of work that may be performed by a thermodynamically closed system at constant temperature and pressure. It also provides a necessary condition for atmospheric processes such as cloud formation and droplet electrification, and can be used to quantify the onset of precipitation in clouds.

The Gibbs free energy change,

$$ {\displaystyle \Delta G=\Delta H-T\Delta S} $$

measured in joules (in SI units), is the maximum amount of non-expansion work that can be extracted from a closed system (one that can exchange heat and work with its surroundings, but not matter) at fixed temperature and pressure. This maximum can be attained only in a completely reversible process. When a system transforms reversibly from an initial state to a final state under these conditions, the decrease in Gibbs free energy equals the work done by the system on its surroundings, minus the work of the pressure forces.

The Gibbs energy is the thermodynamic potential that is minimized when a system reaches chemical equilibrium at constant pressure and temperature when not driven by an applied electrolytic voltage. Its derivative with respect to the reaction coordinate of the system then vanishes at the equilibrium point. As such, a reduction in $ {\displaystyle G} $ is necessary for a reaction to be spontaneous under these conditions.
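The sign criterion above can be illustrated with a short Python sketch for the condensation of water vapor held at a pressure of 1 atm. It is a rough illustration under stated assumptions: the molar enthalpy of vaporization is taken as about 40.65 kJ/mol near the normal boiling point, the entropy of vaporization is obtained from $ {\displaystyle \Delta G = 0} $ at that point, and both are treated as independent of temperature. The function names are hypothetical.

```python
DH_VAP = 40650.0          # J/mol, enthalpy of vaporization of water near 373.15 K
T_BOIL = 373.15           # K, normal boiling point at 1 atm
DS_VAP = DH_VAP / T_BOIL  # J/(mol K), from Delta G = 0 at the boiling point

def gibbs_change(delta_h, delta_s, temperature):
    """Delta G = Delta H - T * Delta S at fixed temperature and pressure."""
    return delta_h - temperature * delta_s

def condensation_delta_g(temperature):
    """Delta G for vapor -> liquid; condensation reverses vaporization.

    Assumes Delta H and Delta S do not vary with temperature.
    """
    return gibbs_change(-DH_VAP, -DS_VAP, temperature)

for t in (363.15, 373.15, 383.15):
    dg = condensation_delta_g(t)
    if abs(dg) < 1e-6:
        verdict = "equilibrium"
    elif dg < 0:
        verdict = "condensation can proceed spontaneously"
    else:
        verdict = "condensation is not spontaneous"
    print(f"T = {t:.2f} K: dG = {dg:9.2f} J/mol, {verdict}")
```

Under these assumptions the change in $ {\displaystyle G} $ is negative below the boiling point and positive above it, which is the behaviour the equilibrium condition describes.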

The initial state of the body, according to Gibbs, is supposed to be such that "the body can be made to pass from it to states of dissipated energy by reversible processes". If the reactants and products are all in their thermodynamic standard states, then the defining equation is written as $ {\displaystyle \Delta G^{\circ }=\Delta H^{\circ }-T\Delta S^{\circ }} $ , where $ {\displaystyle H} $ is enthalpy, $ {\displaystyle T} $ is absolute temperature, and $ {\displaystyle S} $ is entropy.

The Gibbs free energy is defined as:

$$ {\displaystyle G(p,T)=U+pV-TS,} $$

which is the same as:

$$ {\displaystyle G(p,T)=H-TS,} $$

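where $ {\displaystyle U} $ is the internal energy, $ {\displaystyle p} $ is the pressure, $ {\displaystyle V} $ is the volume, $ {\displaystyle T} $ is the absolute temperature, $ {\displaystyle S} $ is the entropy and $ {\displaystyle H} $ is the enthalpy.

The expression for the infinitesimal reversible change in the Gibbs free energy as a function of its "natural variables" $ {\displaystyle p} $ and $ {\displaystyle T} $ , for an open system subjected to the operation of external forces $ {\displaystyle X_{i}} $ (for instance, electrical or magnetic), which cause the external parameters $ {\displaystyle a_{i}} $ of the system to change, is:

$$ {\displaystyle \mathrm {d} G=-S\,\mathrm {d} T+V\,\mathrm {d} p+\sum _{i}\mu _{i}\,\mathrm {d} N_{i}+\sum _{i}X_{i}\,\mathrm {d} a_{i}} $$

where $ {\displaystyle \mu _{i}} $ is the chemical potential of the ith constituent and $ {\displaystyle N_{i}} $ is its mole number.

The structure of Maxwell relations is a statement of equality among the second derivatives for continuous functions. It follows directly from the fact that the order of differentiation of an analytic function of two variables is irrelevant. In the case of Maxwell relations the function considered is a thermodynamic potential, here written $ {\displaystyle \Phi } $ , and $ {\displaystyle x_{i}} $ and $ {\displaystyle x_{j}} $ are two different natural variables for that potential:

$$ {\displaystyle {\frac {\partial }{\partial x_{j}}}\left({\frac {\partial \Phi }{\partial x_{i}}}\right)={\frac {\partial }{\partial x_{i}}}\left({\frac {\partial \Phi }{\partial x_{j}}}\right)} $$

The differential form of internal energy $ {\displaystyle U} $ is: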

$$ {\displaystyle dU=T\,dS-P\,dV} $$

This equation resembles total differentials of the form:

$$ {\displaystyle dz=\left({\frac {\partial z}{\partial x}}\right)_{y}\!dx+\left({\frac {\partial z}{\partial y}}\right)_{x}\!dy} $$

It can be shown, for any equation of the form:

$$ {\displaystyle dz=M\,dx+N\,dy} $$

that:

$$ {\displaystyle M=\left({\frac {\partial z}{\partial x}}\right)_{y},\quad N=\left({\frac {\partial z}{\partial y}}\right)_{x}} $$

Consider the equation $ {\displaystyle dU=T\,dS-P\,dV} $ . We can now immediately see that:

$$ {\displaystyle T=\left({\frac {\partial U}{\partial S}}\right)_{V},\quad -P=\left({\frac {\partial U}{\partial V}}\right)_{S}} $$

And for functions with continuous second derivatives, the mixed partial derivatives are identical, that is, that:

$$ {\displaystyle {\frac {\partial }{\partial y}}\left({\frac {\partial z}{\partial x}}\right)_{y}={\frac {\partial }{\partial x}}\left({\frac {\partial z}{\partial y}}\right)_{x}={\frac {\partial ^{2}z}{\partial y\partial x}}={\frac {\partial ^{2}z}{\partial x\partial y}}} $$

we therefore can see that:

$$ {\displaystyle {\frac {\partial }{\partial V}}\left({\frac {\partial U}{\partial S}}\right)_{V}={\frac {\partial }{\partial S}}\left({\frac {\partial U}{\partial V}}\right)_{S}} $$

and therefore that:

$$ {\displaystyle \left({\frac {\partial T}{\partial V}}\right)_{S}=-\left({\frac {\partial P}{\partial S}}\right)_{V}} $$

Derivation of Maxwell Relation from Helmholtz Free energy: The differential form of Helmholtz free energy is:

$$ {\displaystyle dF=-S\,dT-P\,dV} $$

$$ {\displaystyle -S=\left({\frac {\partial F}{\partial T}}\right)_{V},\quad -P=\left({\frac {\partial F}{\partial V}}\right)_{T}} $$

From symmetry of second derivatives:

$$ {\displaystyle {\frac {\partial }{\partial V}}\left({\frac {\partial F}{\partial T}}\right)_{V}={\frac {\partial }{\partial T}}\left({\frac {\partial F}{\partial V}}\right)_{T}} $$

and therefore that:

$$ {\displaystyle \left({\frac {\partial S}{\partial V}}\right)_{T}=\left({\frac {\partial P}{\partial T}}\right)_{V}} $$
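The Helmholtz-based Maxwell relation can be checked numerically. The Python sketch below is illustrative only: it assumes the temperature- and volume-dependent part of the Helmholtz free energy of a monatomic ideal gas, $ {\displaystyle F=-nRT\,[\ln(V/n)+{\tfrac {3}{2}}\ln T]} $ with constant terms omitted, and estimates every derivative by central finite differences. The function names, step sizes and sample state are assumptions chosen for the example.

```python
import math

R = 8.314462618  # universal gas constant, J/(mol K)

def helmholtz(T, V, n=1.0):
    """T- and V-dependent part of F for a monatomic ideal gas (assumed form).

    Constant terms are omitted; they do not affect the Maxwell relation.
    """
    return -n * R * T * (math.log(V / n) + 1.5 * math.log(T))

def central_diff(f, x, h):
    """Central finite-difference estimate of df/dx at x."""
    return (f(x + h) - f(x - h)) / (2.0 * h)

def entropy(T, V):
    # S = -(dF/dT) at constant V
    return -central_diff(lambda t: helmholtz(t, V), T, 1e-3)

def pressure(T, V):
    # P = -(dF/dV) at constant T
    return -central_diff(lambda v: helmholtz(T, v), V, 1e-6)

T0, V0 = 288.15, 0.0236  # K and m^3 for one mole (illustrative state)

dS_dV = central_diff(lambda v: entropy(T0, v), V0, 1e-4)   # (dS/dV)_T
dP_dT = central_diff(lambda t: pressure(t, V0), T0, 1e-2)  # (dP/dT)_V
print(f"(dS/dV)_T = {dS_dV:.4f}, (dP/dT)_V = {dP_dT:.4f}, nR/V = {R / V0:.4f}")
```

For this model both derivatives should agree with $ {\displaystyle nR/V} $ to within the finite-difference error.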

The other two Maxwell relations can be derived from the differential form of enthalpy $ {\displaystyle dH=T\,dS+V\,dP} $ and the differential form of Gibbs free energy $ {\displaystyle dG=V\,dP-S\,dT} $ in a similar way. So all the Maxwell relationships above follow from one of the Gibbs equations.

General Maxwell Relationships: The relationships above are not the only relationships which can be written. When other work terms involving other natural variables besides the volume work are considered, or when the number of particles is included as a natural variable, other Maxwell relations become apparent. For example, if we have a single-component gas, then the number of particles $ {\displaystyle N} $ is also a natural variable of the above four thermodynamic potentials. The Maxwell relationship for the enthalpy with respect to pressure and particle number would then be:

$$ {\displaystyle \left({\frac {\partial \mu }{\partial P}}\right)_{S,N}=\left({\frac {\partial V}{\partial N}}\right)_{S,P}\qquad ={\frac {\partial ^{2}H}{\partial P\partial N}}} $$

where $ {\displaystyle \mu} $ is the chemical potential. In addition, there are other thermodynamic potentials besides the four that are commonly used, and each of these potentials will yield a set of Maxwell relations. For example, the grand potential $ {\displaystyle \Omega (\mu ,V,T)} $ yields:

$$ {\displaystyle {\begin{aligned}\left({\frac {\partial N}{\partial V}}\right)_{\mu ,T}&=&\left({\frac {\partial P}{\partial \mu }}\right)_{V,T}&=&-{\frac {\partial ^{2}\Omega }{\partial \mu \partial V}}\\\left({\frac {\partial N}{\partial T}}\right)_{\mu ,V}&=&\left({\frac {\partial S}{\partial \mu }}\right)_{V,T}&=&-{\frac {\partial ^{2}\Omega }{\partial \mu \partial T}}\\\left({\frac {\partial P}{\partial T}}\right)_{\mu ,V}&=&\left({\frac {\partial S}{\partial V}}\right)_{\mu ,T}&=&-{\frac {\partial ^{2}\Omega }{\partial V\partial T}}\end{aligned}}} $$

Basic Definition: The heat capacity of an object, denoted by $ {\displaystyle C} $ , is the limit

$$ {\displaystyle C=\lim _{\Delta T\to 0}{\frac {\Delta Q}{\Delta T}},} $$

where $ {\displaystyle \Delta Q} $ is the amount of heat that must be added to the object (of mass $ {\displaystyle M} $ ) in order to raise its temperature by $ {\displaystyle \Delta T} $ .

The value of this parameter usually varies considerably depending on the starting temperature $ {\displaystyle T} $ of the object and the pressure $ {\displaystyle p} $ applied to it. In particular, it typically varies dramatically with phase transitions such as melting or vaporization. Therefore, it should be considered a function $ {\displaystyle C(p,T)} $ of those two variables.

At constant temperature: Because the temperature, and hence the internal energy, of the system is constant throughout the process, all of the supplied heat goes into work. An infinite amount of heat would therefore be required to raise the temperature of the system by one unit, so the heat capacity of the system is infinite or undefined. The heat capacity of water undergoing a phase transition is likewise infinite, because the heat is used in changing the state of the water rather than raising its temperature.

Heterogeneous Atmosphere: The heat capacity for a heterogeneous atmosphere is well defined but may be difficult to measure. In many cases, the (isobaric) heat capacity of the atmosphere can be computed by simply adding together the (isobaric) heat capacities of the individual constituents. However, this computation is valid only when all components of the atmosphere are at the same external pressure before and after the measurement. That may not be possible in some cases. For example, when heating an amount of gas in the atmosphere, its volume and pressure will both increase, even if the atmospheric pressure outside is kept constant. Therefore, the effective heat capacity of the gas, in that situation, will have a value intermediate between its isobaric and isochoric capacities $ {\displaystyle C_{p}} $ and $ {\displaystyle C_{V}} $ .
For complex thermodynamic systems with several interacting parts and state variables, or for measurement conditions that are neither constant pressure nor constant volume, or for situations where the temperature is significantly non-uniform, the simple definitions of heat capacity above are not useful or even meaningful. The heat energy that is supplied may end up as kinetic energy (energy of motion) and potential energy (energy stored in force fields), both at macroscopic and atomic scales. Then the change in temperature will depend on the particular path that the system followed through its phase space between the initial and final states. Namely, one must somehow specify how the positions, velocities, pressures, volumes, etc. changed between the initial and final states, and use the general tools of thermodynamics to predict the system's reaction to a small energy input.

Latent heat (also known as latent energy or heat of transformation) is energy released or absorbed by a body or a thermodynamic system during a constant-temperature process, usually a first-order phase transition. Latent heat can be understood as hidden energy which is supplied or extracted to change the state of a substance (to melt or vaporize it) without changing its temperature or pressure. This includes the latent heat of fusion (solid to liquid), the latent heat of vaporization (liquid to gas) and the latent heat of sublimation (solid to gas).

In Meteorology: Latent heat flux is the flux of energy from the Earth's surface to the atmosphere that is associated with evaporation or transpiration of water at the surface and subsequent condensation of water vapor in the troposphere. It is an important component of Earth's surface energy transfer.
For sublimation and deposition from and into ice, the specific latent heat is almost constant in the temperature range from −40 °C to 0 °C and can be approximated by an empirical quadratic function of temperature.

A process without transfer of heat to or from a system, so that $ {\displaystyle Q = 0} $ , is called adiabatic, and such a system is said to be adiabatically isolated. The assumption that a process is adiabatic is a simplifying assumption frequently made in meteorology. It is useful and often combined with other such idealizations to calculate a good first approximation of a system's behaviour. The formal definition of an adiabatic process is that heat transfer to the system is zero, $ {\displaystyle \delta Q = 0} $ . Then, according to the first law of thermodynamics:

$$ {\displaystyle dU+\delta W=\delta Q=0, \,\,\,\,\,\,\,\,\,\,\,\,\,\,\,(2.6.1)} $$

where $ {\displaystyle dU} $ is the change in the internal energy of the system and $ {\displaystyle \delta W} $ is work done by the system. Any work ($ {\displaystyle \delta W} $ ) done must be done at the expense of internal energy $ {\displaystyle U} $ , since no heat $ {\displaystyle \delta Q} $ is being supplied from the surroundings. Pressure–volume work $ {\displaystyle \delta W} $ done by the system is defined as:

$$ {\displaystyle \delta W=P\,dV. \,\,\,\,\,\,\,\,\,\,\,\,\,\,\,(2.6.2)} $$

However, $ {\displaystyle P} $ does not remain constant during an adiabatic process but instead changes along with $ {\displaystyle V} $ .

To calculate how the values of $ {\displaystyle dP} $ and $ {\displaystyle dV} $ relate to each other as the adiabatic process proceeds, we must first recall the ideal gas law ( $ {\displaystyle PV = nRT} $ ). The internal energy is given by:

$$ {\displaystyle U=\alpha nRT=\alpha PV, \,\,\,\,\,\,\,\,\,\,\,\,\,\,\,(2.6.3)} $$

where $ {\displaystyle \alpha} $ is the number of degrees of freedom divided by 2, $ {\displaystyle R} $ is the universal gas constant and $ {\displaystyle n} $ is the number of moles in the system.

Differentiating equation (2.6.3) yields:

$$ {\displaystyle dU=\alpha nR\,dT=\alpha \,d(PV)=\alpha (P\,dV+V\,dP). \,\,\,\,\,\,\,\,\,\,\,\,\,\,\,(2.6.4)} $$

This equation is often expressed as $ {\displaystyle dU = nC_{V}\,dT} $ because $ {\displaystyle C_{V} = \alpha R} $ .

Now substitute equations (2.6.2) and (2.6.4) into equation (2.6.1) to obtain

$$ {\displaystyle -P\,dV=\alpha P\,dV+\alpha V\,dP, \,\,\,\,\,\,\,\,\,\,\,\,\,\,\,(2.6.5)} $$

factorize $ {\displaystyle -P\,dV} $ :

$$ {\displaystyle -(\alpha +1)P\,dV=\alpha V\,dP, \,\,\,\,\,\,\,\,\,\,\,\,\,\,\,(2.6.6)} $$

and divide both sides by $ {\displaystyle P\,V} $ :

$$ {\displaystyle -(\alpha +1){\frac {dV}{V}}=\alpha {\frac {dP}{P}}.\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,(2.6.7)} $$

After integrating the left and right sides from $ {\displaystyle V_0} $ to $ {\displaystyle V} $ and from $ {\displaystyle P_0} $ to $ {\displaystyle P} $ respectively, and exchanging the two sides, we obtain:

$$ {\displaystyle \ln \left({\frac {P}{P_{0}}}\right)=-{\frac {\alpha +1}{\alpha }}\ln \left({\frac {V}{V_{0}}}\right).\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,(2.6.8)} $$

Exponentiate both sides, substitute $ {\displaystyle \frac {\alpha +1}{\alpha }} $ with $ {\displaystyle \gamma} $ , the heat capacity ratio:

$$ {\displaystyle \left({\frac {P}{P_{0}}}\right)=\left({\frac {V}{V_{0}}}\right)^{-\gamma },\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,(2.6.9)} $$

and eliminate the negative sign to obtain

$$ {\displaystyle \left({\frac {P}{P_{0}}}\right)=\left({\frac {V_{0}}{V}}\right)^{\gamma }.\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,(2.6.10)} $$

Therefore,

$$ {\displaystyle \left({\frac {P}{P_{0}}}\right)\left({\frac {V}{V_{0}}}\right)^{\gamma }=1, \,\,\,\,\,\,\,\,\,\,\,\,\,\,\,(2.6.11)} $$

and

$$ {\displaystyle P_{0}V_{0}^{\gamma }=PV^{\gamma }=\mathrm {constant}.\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,(2.6.12)} $$
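As a hedged numerical illustration of equation (2.6.12), the Python sketch below follows an ideal-gas parcel through a reversible adiabatic expansion. The value $ {\displaystyle \alpha = 5/2} $ (a diatomic gas, giving $ {\displaystyle \gamma = 1.4} $ ), the initial state and the function names are assumptions chosen for the example.

```python
def heat_capacity_ratio(alpha):
    """gamma = (alpha + 1) / alpha, with alpha = degrees of freedom / 2."""
    return (alpha + 1.0) / alpha

def adiabatic_pressure(p0, v0, v, alpha=2.5):
    """Pressure after a reversible adiabatic change from (p0, v0) to volume v.

    Uses P0 * V0**gamma = P * V**gamma, equation (2.6.12).
    """
    gamma = heat_capacity_ratio(alpha)
    return p0 * (v0 / v) ** gamma

P0, V0 = 101325.0, 1.0  # Pa and m^3 (illustrative parcel)
V1 = 1.2                # m^3 after expansion
P1 = adiabatic_pressure(P0, V0, V1)

# With n fixed, the ideal gas law gives T1 / T0 = (P1 * V1) / (P0 * V0).
T_RATIO = (P1 * V1) / (P0 * V0)
print(f"P1 = {P1:.1f} Pa, T1/T0 = {T_RATIO:.4f}")
```

The temperature ratio below one reflects the cooling of an expanding parcel that exchanges no heat with its surroundings.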

The change in internal energy of the system, measured from state 1 to state 2, is:

$$ {\displaystyle \Delta U=\alpha RnT_{2}-\alpha RnT_{1}=\alpha Rn\Delta T.\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,(2.6.13)} $$

At the same time, the work done by the pressure–volume changes as a result of this process is equal to:

$$ {\displaystyle W=\int _{V_{1}}^{V_{2}}P\,dV. \,\,\,\,\,\,\,\,\,\,\,\,\,\,\,(2.6.14)} $$

Since we require the process to be adiabatic, the following equation needs to be true

$$ {\displaystyle \Delta U+W=0. \,\,\,\,\,\,\,\,\,\,\,\,\,\,\,(2.6.15)} $$

By the previous derivation,

$$ {\displaystyle PV^{\gamma }={\text{constant}}=P_{1}V_{1}^{\gamma }.\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,(2.6.16)} $$

Rearranging (2.6.16) gives

$$ {\displaystyle P=P_{1}\left({\frac {V_{1}}{V}}\right)^{\gamma }.\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,(2.6.17)} $$

Substituting this into (2.6.14) gives

$$ {\displaystyle W=\int _{V_{1}}^{V_{2}}P_{1}\left({\frac {V_{1}}{V}}\right)^{\gamma }\,dV.\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,(2.6.18)} $$

Integrating we obtain the expression for work:

$$ {\displaystyle W=P_{1}V_{1}^{\gamma }{\frac {V_{2}^{1-\gamma }-V_{1}^{1-\gamma }}{1-\gamma }}={\frac {P_{2}V_{2}-P_{1}V_{1}}{1-\gamma }}.\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,(2.6.19)} $$

Substituting $ {\displaystyle \gamma = \frac {\alpha + 1}{\alpha}} $ , so that $ {\displaystyle \frac {1}{1-\gamma } = -\alpha} $ , in the first expression:

$$ {\displaystyle W=-\alpha P_{1}V_{1}^{\gamma }\left(V_{2}^{1-\gamma }-V_{1}^{1-\gamma }\right).\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,(2.6.20)} $$

Rearranging,

$$ {\displaystyle W=-\alpha P_{1}V_{1}\left(\left({\frac {V_{2}}{V_{1}}}\right)^{1-\gamma }-1\right).\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,(2.6.21)} $$

Using the ideal gas law and assuming a constant molar quantity (as often happens in practical cases),

$$ {\displaystyle W=-\alpha nRT_{1}\left(\left({\frac {V_{2}}{V_{1}}}\right)^{1-\gamma }-1\right).\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,(2.6.22)} $$

By the continuous formula (2.6.12),

$$ {\displaystyle {\frac {P_{2}}{P_{1}}}=\left({\frac {V_{2}}{V_{1}}}\right)^{-\gamma },\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,(2.6.23)} $$

or

$$ {\displaystyle \left({\frac {P_{2}}{P_{1}}}\right)^{-{\frac {1}{\gamma }}}={\frac {V_{2}}{V_{1}}}.\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,(2.6.24)} $$

Substituting into the previous expression for $ {\displaystyle W} $ ,

$$ {\displaystyle W=-\alpha nRT_{1}\left(\left({\frac {P_{2}}{P_{1}}}\right)^{\frac {\gamma -1}{\gamma }}-1\right).\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,(2.6.25)} $$

Substituting this expression and (2.6.13) in (2.6.15) gives

$$ {\displaystyle \alpha nR(T_{2}-T_{1})=\alpha nRT_{1}\left(\left({\frac {P_{2}}{P_{1}}}\right)^{\frac {\gamma -1}{\gamma }}-1\right).\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,(2.6.26)} $$

Simplifying,

$$ {\displaystyle T_{2}-T_{1}=T_{1}\left(\left({\frac {P_{2}}{P_{1}}}\right)^{\frac {\gamma -1}{\gamma }}-1\right),\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,(2.6.27)} $$

$$ {\displaystyle {\frac {T_{2}}{T_{1}}}-1=\left({\frac {P_{2}}{P_{1}}}\right)^{\frac {\gamma -1}{\gamma }}-1,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,(2.6.28)} $$

$$ {\displaystyle T_{2}=T_{1}\left({\frac {P_{2}}{P_{1}}}\right)^{\frac {\gamma -1}{\gamma }}.\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,(2.6.29)} $$
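The discrete formulas can be checked numerically. The sketch below evaluates (2.6.29), (2.6.25) and (2.6.13) and confirms that they satisfy the adiabatic condition (2.6.15). It assumes $ {\displaystyle \alpha = 5/2} $ and an illustrative parcel of one mole of gas taken from 1000 hPa to 850 hPa; the function names and these input values are assumptions made for illustration only.

```python
R = 8.314462618  # molar gas constant in J/(mol K)


def adiabatic_temperature(t1, p1, p2, alpha=2.5):
    """Final temperature T2 from (2.6.29)."""
    gamma = (alpha + 1.0) / alpha
    return t1 * (p2 / p1) ** ((gamma - 1.0) / gamma)


def adiabatic_work(n, t1, p1, p2, alpha=2.5):
    """Work W done by the gas, from (2.6.25)."""
    gamma = (alpha + 1.0) / alpha
    return -alpha * n * R * t1 * ((p2 / p1) ** ((gamma - 1.0) / gamma) - 1.0)


def internal_energy_change(n, t1, t2, alpha=2.5):
    """Delta U = alpha * n * R * (T2 - T1), from (2.6.13)."""
    return alpha * n * R * (t2 - t1)


if __name__ == "__main__":
    n, t1 = 1.0, 288.15              # mol and K (illustrative values)
    p1, p2 = 100000.0, 85000.0       # Pa: expansion from 1000 hPa to 850 hPa
    t2 = adiabatic_temperature(t1, p1, p2)
    w = adiabatic_work(n, t1, p1, p2)
    du = internal_energy_change(n, t1, t2)
    print("T2 [K]:", t2)
    print("W [J]:", w, " Delta U [J]:", du)
    print("Delta U + W (should be ~0):", du + w)
```

For an expansion ($ {\displaystyle P_{2} < P_{1}} $ ) the work is positive and the internal energy change is negative by the same amount, so the gas cools.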

The earliest formulation of classical mechanics is often referred to as Newtonian mechanics. It consists of the physical concepts based on the foundational works of Sir Isaac Newton, and the mathematical methods invented by Gottfried Wilhelm Leibniz, Joseph-Louis Lagrange, Leonhard Euler, and others in the 17th and 18th centuries to describe the motion of bodies under the influence of forces. Later, more abstract methods were developed, leading to the reformulations of classical mechanics known as Lagrangian mechanics and Hamiltonian mechanics. These advances, made predominantly in the 18th and 19th centuries, extend substantially beyond earlier works, particularly through their use of analytical mechanics. They are, with some modification, also used in all areas of modern physics and most certainly in atmospheric physics.

Newton's laws of motion are three basic laws of classical mechanics that describe the relationship between the motion of an object and the forces acting on it. The mathematical description of motion, or kinematics, is based on the idea of specifying positions using numerical coordinates. Movement is represented by these numbers changing over time: a body's trajectory is represented by a function that assigns to each value of a time variable the values of all the position coordinates. If the body's location as a function of time is $ {\displaystyle s(t)} $ , then its average velocity over the time interval from $ {\displaystyle t_{0}} $ to $ {\displaystyle t_{1}} $ is

$$ {\displaystyle {\frac {\Delta s}{\Delta t}}={\frac {s(t_{1})-s(t_{0})}{t_{1}-t_{0}}}.}$$

The Greek letter $ {\displaystyle \Delta } $ (delta) is used to mean "change in". A positive average velocity means that the position coordinate $ {\displaystyle s} $ increases over the time interval in question, and a negative average velocity indicates a net decrease over the same time interval. The common notation for the instantaneous velocity is to replace $ {\displaystyle \Delta } $ with the symbol $ {\displaystyle d} $ , for example,

$$ {\displaystyle v={\frac {ds}{dt}}.} $$

This denotes that the instantaneous velocity is the derivative of the position with respect to time. The instantaneous velocity can be defined as the limit of the average velocity as the time interval shrinks to zero:

$$ {\displaystyle {\frac {ds}{dt}}=\lim _{\Delta t\to 0}{\frac {s(t+\Delta t)-s(t)}{\Delta t}}.} $$

Acceleration is the derivative of the velocity with respect to time and can likewise be defined as a limit:

$$ {\displaystyle a={\frac {dv}{dt}}=\lim _{\Delta t\to 0}{\frac {v(t+\Delta t)-v(t)}{\Delta t}}.} $$
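The limit definitions above can be approximated with small but finite time steps. The sketch below is a minimal illustration using forward differences; the trajectory $ {\displaystyle s(t)=4.9\,t^{2}} $ , the step sizes and the function names are assumptions chosen for the example.

```python
def average_velocity(s, t0, t1):
    """Average velocity (s(t1) - s(t0)) / (t1 - t0) over the interval [t0, t1]."""
    return (s(t1) - s(t0)) / (t1 - t0)


def velocity(s, t, dt=1e-6):
    """Forward-difference estimate of ds/dt: the limit definition with a small finite dt."""
    return (s(t + dt) - s(t)) / dt


def acceleration(s, t, dt=1e-3):
    """Forward-difference estimate of dv/dt, using a finite-difference velocity.

    Expanding v(t + dt) - v(t) with the same step gives the second difference below.
    """
    return (s(t + 2.0 * dt) - 2.0 * s(t + dt) + s(t)) / (dt * dt)


def trajectory(t):
    """Assumed example trajectory s(t) = 4.9 t^2 (metres, t in seconds)."""
    return 4.9 * t * t


if __name__ == "__main__":
    print("average velocity on [0, 2]:", average_velocity(trajectory, 0.0, 2.0))
    print("instantaneous velocity at t = 2:", velocity(trajectory, 2.0))
    print("acceleration at t = 2:", acceleration(trajectory, 2.0))
```

For this trajectory the exact values are an average velocity of 9.8 over the interval from 0 to 2, an instantaneous velocity of 19.6 at $ {\displaystyle t=2} $ , and a constant acceleration of 9.8; the finite-difference estimates approach these as the step shrinks.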

Consequently, the acceleration is the second derivative of position and can be expressed as $ {\displaystyle {\frac {d^{2}s}{dt^{2}}}} $ .

Position, when thought of as a displacement from an origin point, is a vector: a quantity with both magnitude and direction. Velocity and acceleration are vector quantities as well. The mathematical tools of vector algebra provide the means to describe motion in two, three or more dimensions. Vectors are often denoted with an arrow, as in $ {\displaystyle {\vec {s}}} $ , or in bold typeface, such as $ {\displaystyle {\bf {s}}} $ .

In physics, a force is an influence that can cause an object with mass to change its velocity, i.e., to accelerate. It is measured in the SI unit of newton (N) and represented by the symbol F.

Newton's First Law: The modern understanding of Newton's first law is that no inertial observer is privileged over any other. The concept of an inertial observer makes quantitative the everyday idea of feeling no effects of motion. The principle expressed by Newton's first law is that there is no way to say which inertial observer is "really" moving and which is "really" standing still.

Newton's Second Law: The change of momentum of an object is proportional to the force impressed, and is made in the direction of the straight line in which the force is impressed. The momentum of a body is the product of its mass and its velocity:

$$ {\displaystyle {\vec {p}}=m{\vec {v}}\,.} $$

Newton's second law states that the time derivative of the momentum is the force:

$$ {\displaystyle {\vec {F}}={\frac {d{\vec {p}}}{dt}}\,.} $$

If the mass $ {\displaystyle m}$ does not change with time, then the derivative acts only upon the velocity, and so the force equals the product of the mass and the time derivative of the velocity, which is the acceleration:

$$ {\displaystyle {\vec {F}}=m{\frac {d{\vec {v}}}{dt}}=m{\vec {a}}\,.} $$

As the acceleration is the second derivative of position with respect to time, this can also be written:

$$ {\displaystyle {\vec {F}}=m{\frac {d^{2}{\vec {s}}}{dt^{2}}}\,.} $$

Newton's Third Law: Newton's third law is a statement of the conservation of momentum. The latter remains true even in cases where Newton's statement does not, for instance when force fields as well as material bodies carry momentum, and, when momentum is defined properly, in quantum mechanics as well. In Newtonian mechanics, if two bodies have momenta $ {\displaystyle {\vec {p}}_{1}} $ and $ {\displaystyle {\vec {p}}_{2}} $ respectively, then the total momentum of the pair is $ {\displaystyle {\vec {p}}={\vec {p}}_{1}+{\vec {p}}_{2}} $ , and the rate of change of $ {\displaystyle {\vec {p}}} $ is:

$$ {\displaystyle {\frac {d{\vec {p}}}{dt}}={\frac {d{\vec {p}}_{1}}{dt}}+{\frac {d{\vec {p}}_{2}}{dt}}.} $$

By Newton's second law, the first term is the total force upon the first body, and the second term is the total force upon the second body. If the two bodies are isolated from outside influences, the only force upon the first body can be that from the second, and vice versa. By Newton's third law, these forces have equal magnitude but opposite direction, so they cancel when added, and $ {\displaystyle {\vec {p}}} $ is constant. Alternatively, if $ {\displaystyle {\vec {p}}} $ is known to be constant, it follows that the forces have equal magnitude and opposite direction.

Lagrangian mechanics is a formulation of classical mechanics founded on the stationary-action principle (also known as the principle of least action). Lagrangian mechanics describes a mechanical system as a pair $ {\textstyle (M,L)} $ consisting of a configuration space $ {\textstyle M} $ and a smooth function $ {\textstyle L} $ within that space called a Lagrangian.

In Newtonian mechanics, the equations of motion are given by Newton's laws. The second law, "net force equals mass times acceleration",

$$ {\displaystyle \sum \mathbf {F} =m{\frac {d^{2}\mathbf {r} }{dt^{2}}}} $$

applies to each particle. For an $ {\textstyle N} $ -particle system in 3 dimensions, there are $ {\textstyle 3N} $ second-order ordinary differential equations in the positions of the particles to solve.

Instead of forces, Lagrangian mechanics uses the energies in the system. The Lagrangian is a function which summarizes the dynamics of the entire system. Overall, the Lagrangian has units of energy. Any function which generates the correct equations of motion, in agreement with physical laws, can be taken as a Lagrangian. It is nevertheless possible to construct general expressions for large classes of applications. The non-relativistic Lagrangian for a system of particles in the absence of a magnetic field is given by:

$$ {\displaystyle L=T-V} $$

where

$$ {\displaystyle T={\frac {1}{2}}\sum _{k=1}^{N}m_{k}v_{k}^{2}} $$

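is the total kinetic energy of the system and $ {\displaystyle V} $ is its potential energy. If $ {\displaystyle T} $ or $ {\displaystyle V} $ or both depend explicitly on time, the Lagrangian is explicitly time-dependent.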
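To make the expression $ {\displaystyle L=T-V} $ concrete, the sketch below evaluates the kinetic energy sum for a small set of particles and subtracts a potential energy. The potential used here, uniform gravity with $ {\displaystyle V=\sum _{k}m_{k}gz_{k}} $ , is an assumption chosen only for illustration, as are the function names and the sample masses, positions and velocities.

```python
def kinetic_energy(masses, velocities):
    """T = 1/2 * sum_k m_k * |v_k|^2 for velocity vectors given as (vx, vy, vz)."""
    return 0.5 * sum(m * sum(c * c for c in v) for m, v in zip(masses, velocities))


def gravitational_potential(masses, positions, g=9.81):
    """Assumed potential V = sum_k m_k * g * z_k (uniform gravity, z measured upward)."""
    return sum(m * g * r[2] for m, r in zip(masses, positions))


def lagrangian(masses, positions, velocities, g=9.81):
    """Non-relativistic Lagrangian L = T - V for the assumed potential."""
    return kinetic_energy(masses, velocities) - gravitational_potential(masses, positions, g)


if __name__ == "__main__":
    masses = [1.0, 2.0]                              # kg (illustrative)
    positions = [(0.0, 0.0, 1.0), (0.0, 0.0, 0.5)]   # m
    velocities = [(1.0, 0.0, 0.0), (0.0, 2.0, 0.0)]  # m/s
    print("T [J]:", kinetic_energy(masses, velocities))
    print("V [J]:", gravitational_potential(masses, positions))
    print("L [J]:", lagrangian(masses, positions, velocities))
```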

Part 2.3: Maxwell's Relations

Part 2.4: Heat Capacity

Heat capacities of a homogeneous system: At constant pressure, heat supplied to the system contributes to both the work done and the change in internal energy, according to the first law of thermodynamics. The heat capacity, at constant pressure, is called $ {\displaystyle C_{p}} $ and defined as:

$$ {\displaystyle C_{p}={\frac {\delta Q}{dT}}{\Bigr |}_{p=const}} $$

From the first law of thermodynamics it follows that $ {\displaystyle \delta Q=dU+p\,dV} $ . Expressing the internal energy and the volume as functions of $ {\displaystyle p} $ and $ {\displaystyle T} $ gives:

$$ {\displaystyle \delta Q=\left({\frac {\partial U}{\partial T}}\right)_{p}dT+\left({\frac {\partial U}{\partial p}}\right)_{T}dp+p\left[\left({\frac {\partial V}{\partial T}}\right)_{p}dT+\left({\frac {\partial V}{\partial p}}\right)_{T}dp\right]} $$

For constant pressure $ {\displaystyle (dp=0)} $ the equation simplifies to:

$$ {\displaystyle C_{p}={\frac {\delta Q}{dT}}{\Bigr |}_{p=const}=\left({\frac {\partial U}{\partial T}}\right)_{p}+p\left({\frac {\partial V}{\partial T}}\right)_{p}} $$

At constant volume: In a process at constant volume no expansion work is done, so the heat supplied contributes only to the change in internal energy. The heat capacity obtained this way is denoted $ {\displaystyle C_{V}} $ . The value of $ {\displaystyle C_{V}} $ is always less than the value of $ {\displaystyle C_{p}} $ ( $ {\displaystyle C_{V} < C_{p}} $ ).

Expressing the internal energy as a function of the variables $ {\displaystyle T} $ and $ {\displaystyle V} $ gives:

$$ {\displaystyle \delta Q=\left({\frac {\partial U}{\partial T}}\right)_{V}dT+\left({\frac {\partial U}{\partial V}}\right)_{T}dV+pdV} $$

For a constant volume process $ {\displaystyle (dV=0)} $ the heat capacity reads:

$$ {\displaystyle C_{V}={\frac {\delta Q}{dT}}{\Bigr |}_{V={\text{const}}}=\left({\frac {\partial U}{\partial T}}\right)_{V}} $$

The relation between $ {\displaystyle C_{V}} $ and $ {\displaystyle C_{p}} $ is then:

$$ {\displaystyle C_{p}=C_{V}+\left(\left({\frac {\partial U}{\partial V}}\right)_{T}+p\right)\left({\frac {\partial V}{\partial T}}\right)_{p}} $$

Calculating $ {\displaystyle C_{p}} $ and $ {\displaystyle C_{V}} $ for an ideal gas:

$ {\displaystyle C_{p}-C_{V}=nR.} $

$ {\displaystyle C_{p}/C_{V}=\gamma ,} $

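where $ {\displaystyle n} $ is the amount of gas in moles, $ {\displaystyle R} $ is the universal gas constant, and $ {\displaystyle \gamma } $ is the heat capacity ratio.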
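The two ideal-gas relations follow from the general relation between $ {\displaystyle C_{p}} $ and $ {\displaystyle C_{V}} $ given above, using the ideal gas law $ {\displaystyle pV=nRT} $ and the fact that the internal energy of an ideal gas depends on temperature only. The short worked derivation below also connects $ {\displaystyle \gamma } $ to the constant $ {\displaystyle \alpha } $ of the adiabatic derivation, under the assumption that the heat capacity of the whole system is $ {\displaystyle C_{V}=\alpha nR} $ (the adiabatic derivation writes the molar value as $ {\displaystyle \alpha R} $ ).

```latex
\begin{aligned}
\left(\frac{\partial U}{\partial V}\right)_{T} &= 0, \qquad
\left(\frac{\partial V}{\partial T}\right)_{p} = \frac{nR}{p}
\quad\Longrightarrow\quad
C_{p} = C_{V} + p\,\frac{nR}{p} = C_{V} + nR, \\
C_{p} &= \gamma\, C_{V}
\quad\Longrightarrow\quad
C_{V} = \frac{nR}{\gamma - 1}, \qquad
C_{p} = \frac{\gamma\, nR}{\gamma - 1}, \\
C_{V} &= \alpha nR
\quad\Longrightarrow\quad
\gamma = \frac{C_{p}}{C_{V}} = \frac{\alpha nR + nR}{\alpha nR} = \frac{\alpha + 1}{\alpha}.
\end{aligned}
```

With $ {\displaystyle \alpha = 5/2} $ , the value usually quoted for a diatomic ideal gas, this gives $ {\displaystyle \gamma = 7/5} $ .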

Part 2.5: Latent Heat

The specific latent heat of condensation of water in the temperature range from −25 °C to 40 °C is approximated by the following empirical cubic function:

$$ {\displaystyle L_{\text{water}}(T)\approx \left(2500.8-2.36T+0.0016T^{2}-0.00006T^{3}\right)~{\text{J/g}},} $$

where the temperature $ {\displaystyle T} $ is taken to be the numerical value in °C.

Part 2.6: Adiabatic Processes
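The empirical cubic fit for $ {\displaystyle L_{\text{water}}(T)} $ given in Part 2.5 is straightforward to evaluate. The sketch below is a minimal implementation that also rejects temperatures outside the stated range of validity; the function name and the choice to raise an error outside that range are assumptions made for this example.

```python
def latent_heat_water(t_celsius):
    """Specific latent heat of condensation of water in J/g from the cubic fit.

    The fit is stated only for -25 degC to 40 degC, so other inputs are rejected.
    """
    if not -25.0 <= t_celsius <= 40.0:
        raise ValueError("cubic fit is only stated for -25 degC to 40 degC")
    t = t_celsius
    return 2500.8 - 2.36 * t + 0.0016 * t ** 2 - 0.00006 * t ** 3


if __name__ == "__main__":
    for t in (-20.0, 0.0, 20.0, 40.0):
        print(t, "degC ->", latent_heat_water(t), "J/g")
```

The latent heat decreases with increasing temperature over this range, from 2500.8 J/g at 0 °C to about 2453.8 J/g at 20 °C.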

Section Three: Classical Mechanics

Part One: Newtonian Mechanics

Part Two: Lagrangian Mechanics

With these definitions, Lagrange's equations of the first kind are:

$$ {\displaystyle {\frac {\partial L}{\partial \mathbf {r} _{k}}}-{\frac {\mathrm {d} }{\mathrm {d} t}}{\frac {\partial L}{\partial {\dot {\mathbf {r} }}_{k}}}+\sum _{i=1}^{C}\lambda _{i}{\frac {\partial f_{i}}{\partial \mathbf {r} _{k}}}=0} $$

where $ {\displaystyle k=1,2,\ldots ,N} $ labels the particles, there is a Lagrange multiplier $ {\displaystyle \lambda _{i}} $ for each constraint equation $ {\displaystyle f_{i}} $ , and

$$ {\displaystyle {\frac {\partial }{\partial \mathbf {r} _{k}}}\equiv \left({\frac {\partial }{\partial x_{k}}},{\frac {\partial }{\partial y_{k}}},{\frac {\partial }{\partial z_{k}}}\right)\,,\quad {\frac {\partial }{\partial {\dot {\mathbf {r} }}_{k}}}\equiv \left({\frac {\partial }{\partial {\dot {x}}_{k}}},{\frac {\partial }{\partial {\dot {y}}_{k}}},{\frac {\partial }{\partial {\dot {z}}_{k}}}\right)} $$
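As a consistency check, Lagrange's equations of the first kind reduce to Newton's second law in the simplest case. The worked example below assumes a single unconstrained particle ($ {\displaystyle N=1} $ , $ {\displaystyle C=0} $ ) of mass $ {\displaystyle m} $ moving in a potential $ {\displaystyle V(\mathbf {r} )} $ that does not depend on velocity; these simplifying assumptions are ours and serve only to illustrate the equation.

```latex
\begin{aligned}
L &= \tfrac{1}{2}\, m\, \dot{\mathbf{r}} \cdot \dot{\mathbf{r}} - V(\mathbf{r}), \\
\frac{\partial L}{\partial \mathbf{r}} &= -\nabla V(\mathbf{r}), \qquad
\frac{\partial L}{\partial \dot{\mathbf{r}}} = m\, \dot{\mathbf{r}}, \\
\frac{\partial L}{\partial \mathbf{r}}
  - \frac{\mathrm{d}}{\mathrm{d}t} \frac{\partial L}{\partial \dot{\mathbf{r}}} &= 0
\quad\Longrightarrow\quad
m\, \ddot{\mathbf{r}} = -\nabla V(\mathbf{r}) = \mathbf{F}.
\end{aligned}
```

With no constraints the sum over Lagrange multipliers is empty, and the remaining two terms reproduce "net force equals mass times acceleration" for a conservative force.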

Part Three: Hamiltonian Mechanics